MARCH 2024 | Volume 45, Issue 1

Decision-Supporting Capability Evaluation throughout the Capability Development Lifecycle



Abstract

Since 2020, the Integrated Decision Support Key (IDSK) has provided a single, integrated framework to guide the collection of data from live, virtual, constructive (LVC) test and modeling and simulation (M&S) events needed to evaluate a system’s operational and technical capabilities. The evaluation results inform the full range of operational, programmatic, and technical decisions throughout a system’s acquisition lifecycle. Just as the Test and Evaluation as a Continuum (TEAAC) concept [Collins and Senechal, 2023] has recently expanded T&E beyond the strict confines of a system acquisition to the full capability lifecycle, the Decision Support Evaluation Framework (DSEF) expands the IDSK’s evaluation and decision support beyond the confines of the acquisition activity. The IDSK guides data collection throughout the acquisition lifecycle, while the DSEF guides data collection from the full continuum of wargames, exercises, experiments, demonstrations, and LVC/M&S events throughout the full capability delivery lifecycle. This capability lifecycle spans early concept capability development planning, laboratory technology maturation, and prototype experimentation and demonstrations through system development and operations within an Operational Enterprise or System-of-Systems (SoS) Architecture. This article describes the technology development and operational fielding decision space and how the DSEF focuses evaluation and test to inform those decisions throughout the capability delivery continuum.

Motivation

The advancement and pace of adversary threat capabilities are driving necessary changes in the technologies being developed, the pace of their fielding, and the way we need to fight. Timely and targeted knowledge to inform decisions is essential to realizing combat value and to advancing our national efforts to meet this challenge. The DSEF’s critical thought process of identifying key decision points, linking them with the capability evaluation needed to inform them, and building test designs to provide the data for that evaluation will accelerate the design and execution of T&E and, thus, our capability development activities. In their March 2023 ITEA Journal article, “Test and Evaluation as a Continuum” [Collins and Senechal, 2023], Mr. Christopher Collins and Mr. Kenneth Senechal stated:

For the United States to maintain an advantage over our potential adversaries, we must make a critical change in how test and evaluation (T&E) supports capability delivery. Making this change requires a new paradigm in which T&E will inform today’s complex technology development and fielding decision space continually throughout the entire capability life cycle—from the earliest stages of mission engineering (ME) through operations and sustainment (O&S). This transformational shift will significantly strengthen the role of T&E in enabling critical warfighting capability delivery at the “speed of relevance.”

In their ITEA Journal article, Mr. Collins and Mr. Senechal described the entire capability life cycle, from ME through O&S, and how their TEAAC concept enables critical warfighter capability delivery. This article describes a key enabler of TEAAC: the Decision Support Evaluation Framework (DSEF). The DSEF is the capability evaluation-based decision-support thought process the T&E community should use to develop test designs that focus on informing key decisions throughout the TEAAC and the capability development lifecycle. This focused T&E design will accelerate capability development and delivery to counter ever-increasing adversary capabilities.

Keywords: integrated decision support key; live, virtual, constructive test; modeling and simulation; decision support evaluation framework

Background

For T&E to provide the most relevant and timely information for developing and fielding the capabilities needed to meet mission needs, test planning should focus on gathering the data required for capability evaluation and decision support. Specifically, this requires a deliberate process that defines the decisions to be made, the capabilities to be evaluated, and the data source events (e.g., test, M&S, exercises) that generate the data for capability evaluation and, ultimately, decision support. The Integrated Decision Support Key (IDSK) combines Developmental T&E’s (DT&E’s) Developmental Evaluation Framework (DEF) and Operational T&E’s (OT&E’s) Operational Evaluation Framework (OEF) with the “decision space” to form a single decision-supporting evaluation framework. The critical thought process of articulating the key decisions, the operational and technical capabilities to be evaluated to inform those decisions, and the test events that provide the data for evaluation is captured in an IDSK. The IDSK sets the stage for the program’s integrated decision-supporting evaluation and test plan. It describes how an acquisition Program of Record’s (PoR’s) technical, programmatic, and operational decisions will be informed by an evaluation of technical and operational capabilities using data generated from contractor, developmental, integrated, and operational test and M&S events throughout the acquisition lifecycle.

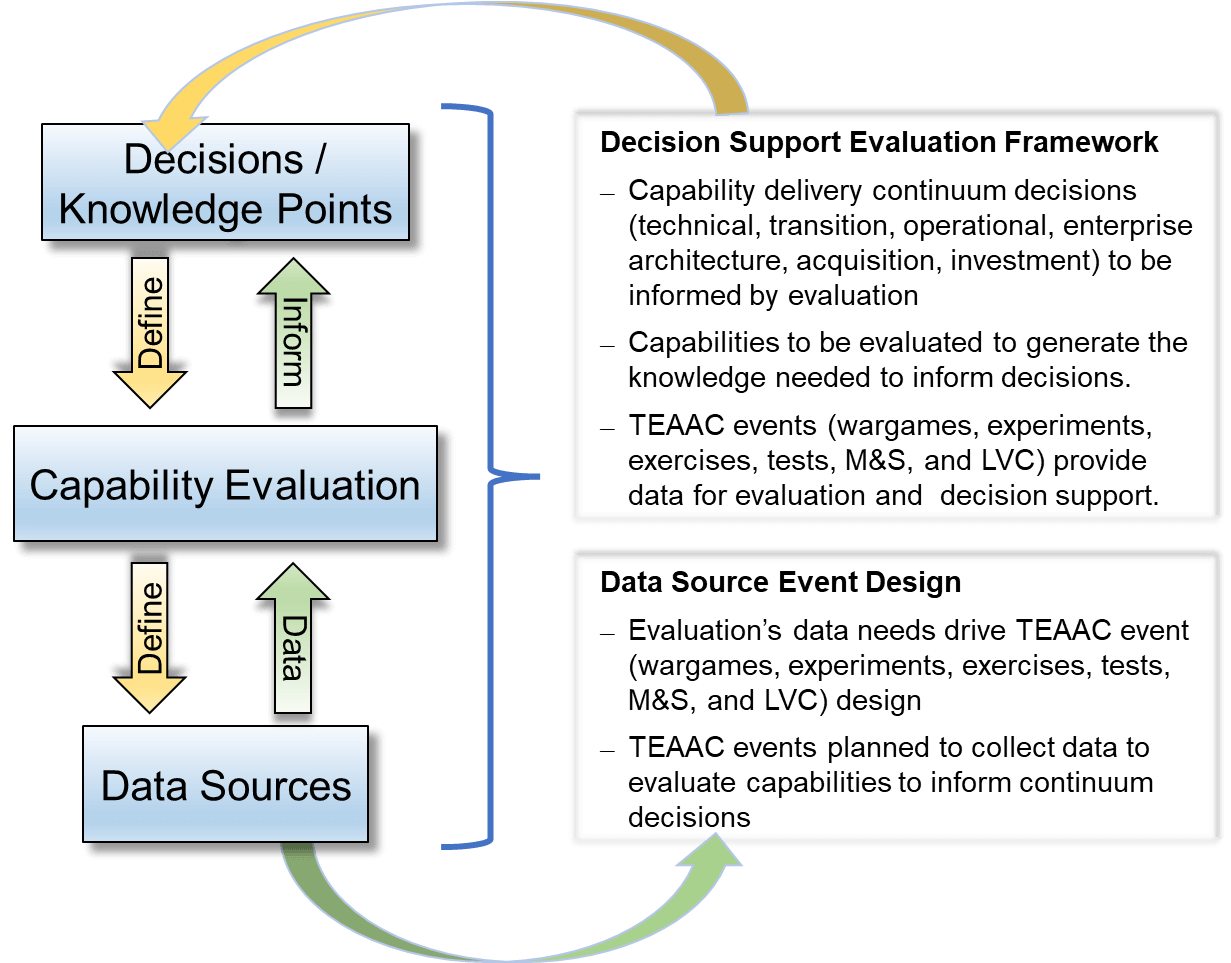

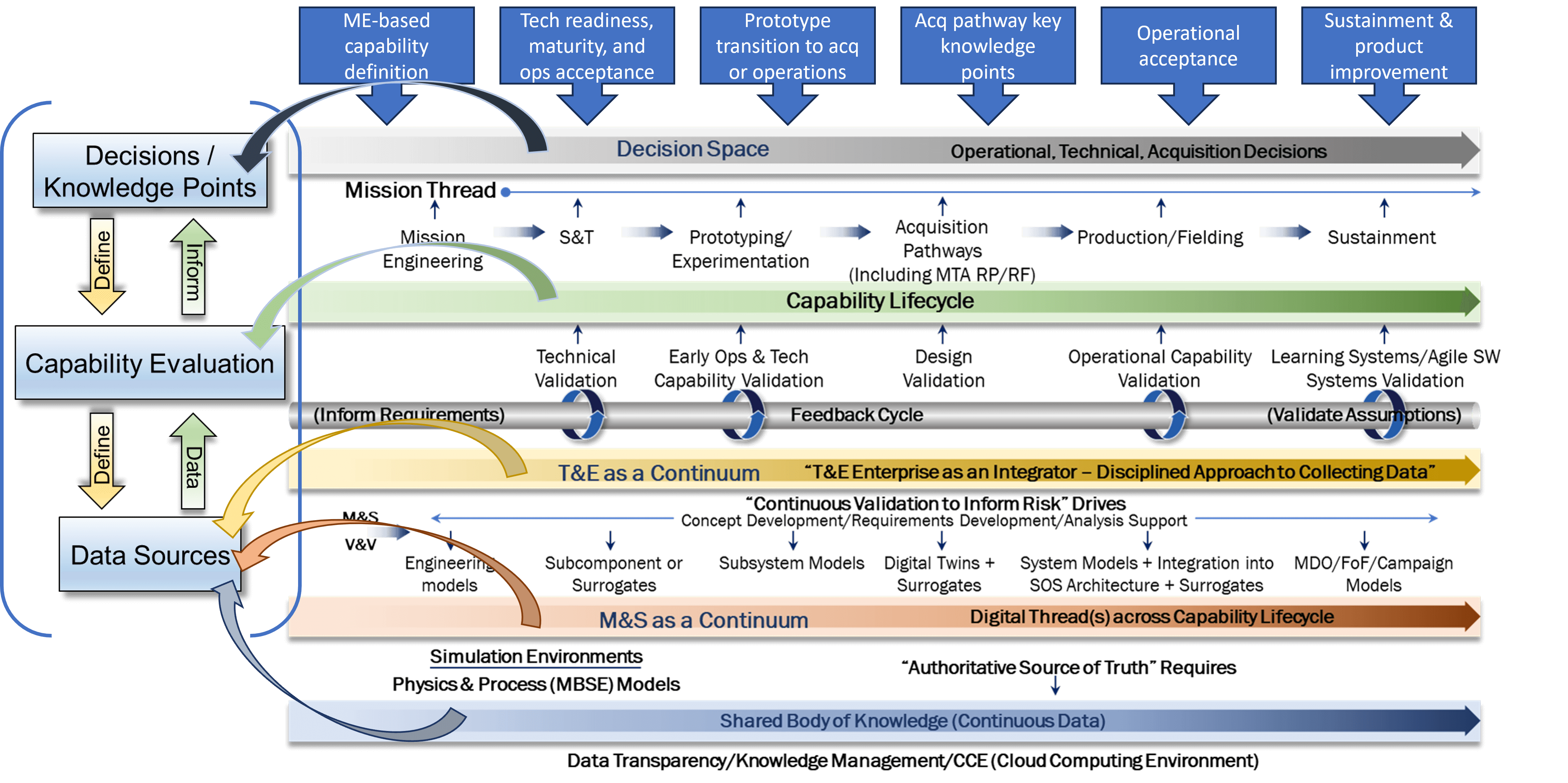

The Decision Support Evaluation Framework (DSEF) broadens the IDSK’s critical thought process, expanding the aperture beyond the IDSK’s PoR acquisition lifecycle view to the full capability delivery continuum. The capability delivery lifecycle begins before a PoR’s acquisition lifecycle, with concept and technical solution definition in Capability Development Planning and Mission Engineering (ME), technology development in Science and Technology (S&T), and product experimentation and refinement in Prototyping and Experimentation (P&E). The capability delivery lifecycle also considers the system’s contributions in a broader context (e.g., its place within an operational enterprise or a Program Executive Officer’s (PEO) capability portfolio). While we have described the “standard” DoD phases that precede and follow a PoR acquisition lifecycle, the DSEF’s decision-evaluation-data source thought process, illustrated in Figure 1, is equally applicable to any situation where decision-making will benefit from capability evaluation, for example, informing investments within an S&T portfolio or understanding the optimal design of an operational architecture.

Figure 1: Decision – Evaluation – Data Source thought process instantiated for the TEAAC with the DSEF

Informing Decisions with Capability Evaluation

When a decision can benefit from the knowledge gained through capability evaluation, the Decision Support Evaluation Framework (DSEF) can be called upon to focus evaluation strategies on informing that decision. The DSEF’s decisions run the gamut from early concept tradeoffs and investments to sustainment and product improvements (e.g., early Capability Development Planning (CDP) tradeoffs and investments, S&T technology adoption, P&E prototype transition, program and enterprise architecture design, fielding, and sustainment modifications). Evaluating operational and technical capabilities with data gathered throughout the T&E as a Continuum (TEAAC), at wargames, experiments, exercises, tests, M&S, and LVC events, generates the knowledge for informed decisions.

Figure 2: DSEF and TEAAC

The TEAAC concept combines Mission Engineering (ME), Systems Engineering (SE), and T&E into parallel, collaborative, and combined efforts, as illustrated in Figure 2. ME’s definition of Mission Threads (MT) and Mission Engineering Threads (MET) allows SE and T&E to develop a shared understanding of the operational and technical capabilities needed to ensure successful mission accomplishment. This combined approach to conducting ME, SE, and T&E sets the stage for an early and consistent focus on evaluating mission-critical technical and operational capabilities to inform key decisions that will deliver critical warfighting capability at the “speed of relevance.”

The DSEF defines the decision space and aligns it with the capabilities to be evaluated to inform the decisions and, ultimately, with the data to be collected to perform the evaluation. The “art” and critical thought in developing a DSEF lie in properly stating the decision space (i.e., What decision needs to be made? When does the decision need to be made? What is the essence of the information the decision-maker needs to make an informed decision?). Once the decision space is stated, the operational and technical capabilities being considered or developed are defined. Capability definitions can come from the ME study’s MT/MET definitions, technical specifications, requirements documents, architecture designs, or portfolio capability documentation. It’s never too early to think through the decisions and capabilities and define an early DSEF! Early in the continuum, when firm requirements have not yet been established, an ad hoc approach of eliciting capability definitions through a facilitated discussion with the operational planners, architects, or systems engineers has worked well in the past. Finally, the capabilities to be evaluated are linked to the decision(s) they will inform, and the TEAAC events that will provide the evaluation data (e.g., wargames, exercises, experiments, demonstrations, tests, M&S events) are identified.
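The decision-evaluation-data source linkage can be pictured as a simple traceability structure. The Python sketch below is illustrative only: the `Decision` and `Capability` classes, their fields, the `find_gaps` check, and the example entries are hypothetical and are not part of any published DSEF template or tool. It assumes each decision carries its questions and linked capabilities, and each capability lists the TEAAC events expected to supply its data, so that a decision with nothing to evaluate, or a capability with no data source, is easy to spot.

```python
from dataclasses import dataclass, field


@dataclass
class Capability:
    """An operational or technical capability to be evaluated (hypothetical schema)."""
    name: str
    kind: str  # "operational" or "technical"
    data_sources: list[str] = field(default_factory=list)  # TEAAC events expected to supply data


@dataclass
class Decision:
    """A decision point, the decision-maker's questions, and the capabilities that inform it."""
    name: str
    timing: str
    questions: list[str]
    capabilities: list[Capability] = field(default_factory=list)


def find_gaps(decisions: list[Decision]) -> list[str]:
    """Flag decisions with no linked capability and capabilities with no data source event."""
    gaps = []
    for d in decisions:
        if not d.capabilities:
            gaps.append(f"decision '{d.name}' has no capability to evaluate")
        for c in d.capabilities:
            if not c.data_sources:
                gaps.append(f"capability '{c.name}' for '{d.name}' has no data source event")
    return gaps


if __name__ == "__main__":
    # Illustrative entries only.
    detect = Capability("Detect target in contested environment", "operational",
                        ["Wargame A", "Experiment B"])
    track = Capability("Track update rate", "technical")  # no data source identified yet
    transition = Decision(
        name="Prototype transition",
        timing="End of experimentation campaign",
        questions=["Is the prototype mature and effective enough to transition?"],
        capabilities=[detect, track],
    )
    for gap in find_gaps([transition]):
        print(gap)
```

Running the sketch should report only the missing data source for the technical capability. In practice the same linkage is often kept in a table or matrix; the code simply makes the completeness check explicit.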

Because developing the decision space is the most challenging part of the thought process, the next section discusses the decisions and questions and how they vary as a concept moves through the capability delivery continuum: from early capability development planning, through technology development and prototyping and experimentation, to operational system-of-systems (SoS) architecture delivery.

The DSEF Decision Space

The DSEF’s decision space consists of the critical decision (or knowledge) points that will benefit from the knowledge gained by evaluating the concept, system, or architecture. The questions aligned with each decision point articulate the essence of the information the decision-maker needs to make an informed decision, and they serve to focus the capability evaluation. In other words, the decision-makers’ questions identify which capabilities (or bins of capability, e.g., performance or threat resilience) need to be evaluated to assist them in decision-making throughout the capability development cycle. The resulting time-phased evaluation plan translates into data needs, which are used to develop the data collection plan. The data collection plan drives the conduct of wargames, experiments, exercises, demonstrations, tests, M&S events, etc., to gather the data needed for capability evaluation and decision support.
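To make the step from time-phased evaluation plan to data collection plan concrete, here is a minimal sketch assuming the evaluation plan is held as rows of (decision, decision date, capability, data source event, event date). The rows, dates, and function names are hypothetical. `build_collection_plan` regroups the data needs by event in chronological order, and `late_events` flags any event scheduled after the decision it is meant to inform.

```python
from collections import defaultdict
from datetime import date

# Hypothetical evaluation-plan rows:
# (decision, decision date, capability to evaluate, data source event, event date)
EVALUATION_PLAN = [
    ("Technology adoption", date(2025, 3, 1), "Sensor range", "Lab test 1", date(2024, 11, 15)),
    ("Technology adoption", date(2025, 3, 1), "Sensor range", "Field experiment", date(2025, 4, 10)),
    ("Prototype transition", date(2025, 9, 30), "Mission effectiveness", "Theater exercise", date(2025, 6, 1)),
]


def build_collection_plan(rows):
    """Group data needs by data source event, ordered by event date."""
    plan = defaultdict(list)
    for decision, _decision_date, capability, event, event_date in rows:
        plan[(event_date, event)].append((capability, decision))
    return dict(sorted(plan.items()))


def late_events(rows):
    """List (event, decision) pairs where the event occurs after the decision it should inform."""
    return [
        (event, decision)
        for decision, decision_date, _capability, event, event_date in rows
        if event_date > decision_date
    ]


if __name__ == "__main__":
    for (when, event), needs in build_collection_plan(EVALUATION_PLAN).items():
        print(when.isoformat(), event, needs)
    print("Late:", late_events(EVALUATION_PLAN))
```

With the sample rows, the field experiment is flagged because it falls after the technology adoption decision it was meant to inform: the kind of timing mismatch a DSEF review would aim to catch before event schedules are locked in.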

The sections below describe the decision space that might accompany major segments of the capability development cycle. Specifically highlighted are Capability Development Planning (CDP), Science and Technology (S&T), Prototyping & Experimentation (P&E), and Operational System-of-Systems Enterprise Architectures phases of the cycle. Within each phase, the decision-makers face different types of decisions and thus require differing capability evaluations and data sources to inform their decisions. For example, the Capability Development Planner is charged with building a long-term plan that meets strategic end-states. The CDP must be informed by the evaluation of wargaming events to understand the concepts’ strategic outcomes. On the other hand, during exercises or experimentation of a prototype’s performance in a mission scenario (i.e., the P&E phase), decision-making will be focused on technical maturity, mission effectiveness, and the prototype’s adequacy in meeting requirements for it to transition into an acquisition program.

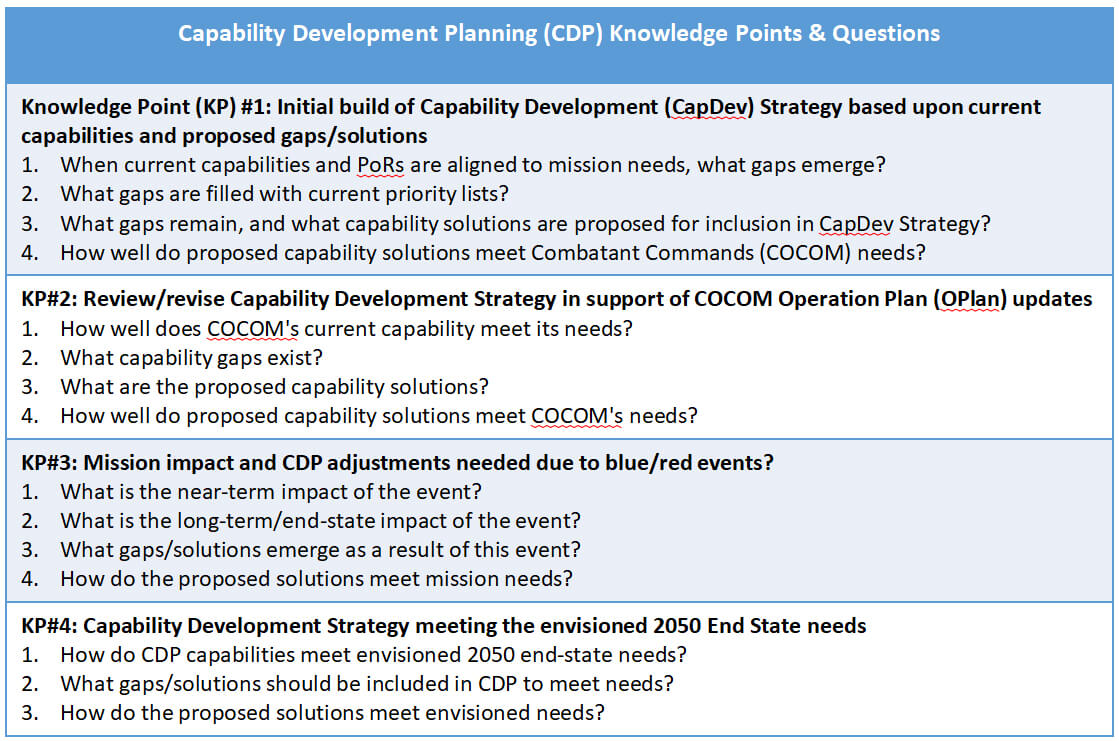

Capability Development Planning

Capability Development Planning (CDP) is the set of analytical and decision-making processes employed to anticipate, explore, and pursue Research and Development (R&D) opportunities, acquisitions, and strategic adaptations that will enable full support of the National Defense Strategy (NDS) well into the future [Leftwich et al., 2018]. During this early strategic planning process, the types of decisions are broad, and the data for capability evaluation will likely come from wargames. The Capability Development Planning DSEF articulates those decisions and questions and guides wargame planning to collect the data needed to inform decisions on R&D opportunities, acquisitions, and strategic adaptations.

Table 1 shows a notional set of DSEF decision space decisions and questions to support creating and updating a long-term capability development strategy.

Table 1 Capability Development Planning (CDP) DSEF Decision Space

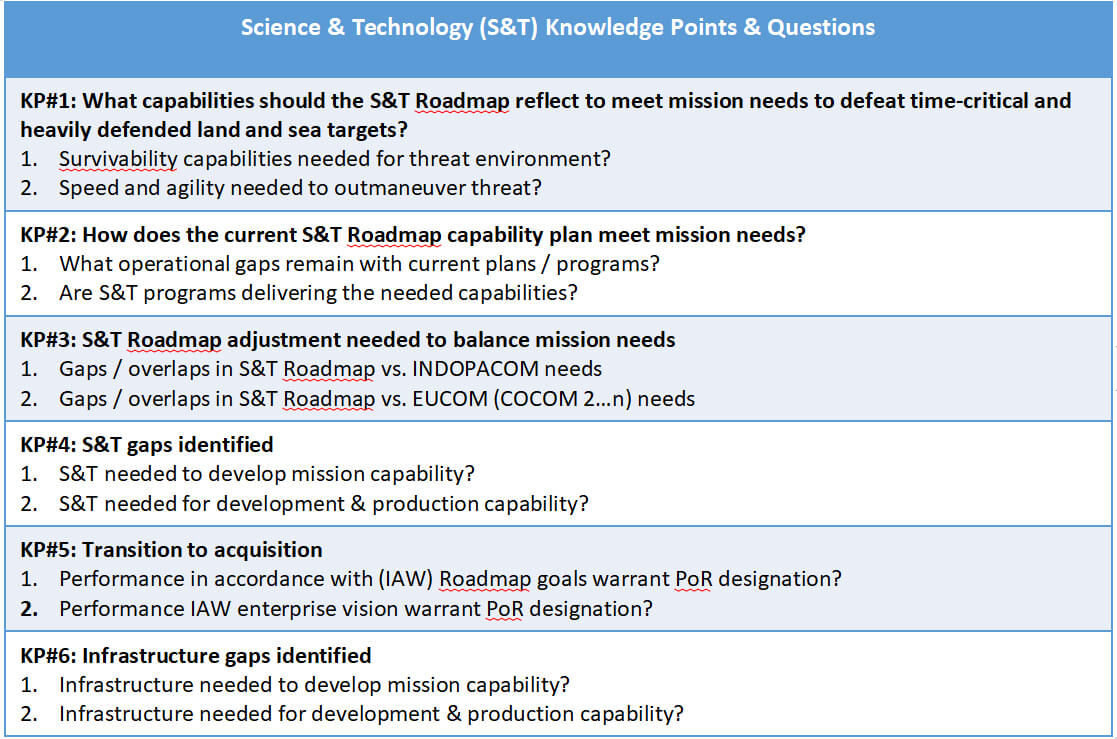

Science and Technology

The DSEF can be tailored to inform Science and Technology (S&T) decision-making, such as investments, the capabilities to be pursued to fill operational gaps, and technology readiness for transition to prototyping or acquisition. The data used to evaluate technology are likely drawn from laboratory or early experimentation events. In addition to informing the transition of S&T into the acquisition process, an S&T-focused DSEF, in collaboration with the S&T community’s investment planning, can guide S&T programs toward developing the mission-impacting technologies needed in the future fight. Table 2 illustrates a notional decision space to inform S&T roadmap development in support of the theater commander’s mission needs.

Table 2 Science & Technology (S&T) DSEF Decision Space

Prototyping & Experimentation

Dr. Michael D. Griffin, then-Under Secretary of Defense for Research and Engineering (USD(R&E)), acknowledged challenges with the current pace at which the U.S. develops advanced warfighting capability in his April 18, 2018, testimony to the Senate Armed Services Committee’s Subcommittee on Emerging Threats and Capabilities:

“We are in constant competition, and the pace of that competition is increasing. In a world where everyone pretty much today has equal access to technology, innovation is important, and it will always be important, but speed becomes the differentiating factor. How quickly we can translate technology into fielded capability is where we can achieve and maintain our technological edge. It is not just about speed of discovery. It is about speed of delivery to the field.”

To address these challenges, the 2018 National Defense Strategy (NDS) emphasized adopting a risk-tolerant approach to capability development through the extensive use of prototyping and experimentation (P&E) to drive down technical and integration risk, validate designs, gain warfighter feedback, and better inform achievable and affordable requirements, with the ultimate goal of delivering capabilities to the Joint warfighter at the speed of relevance.

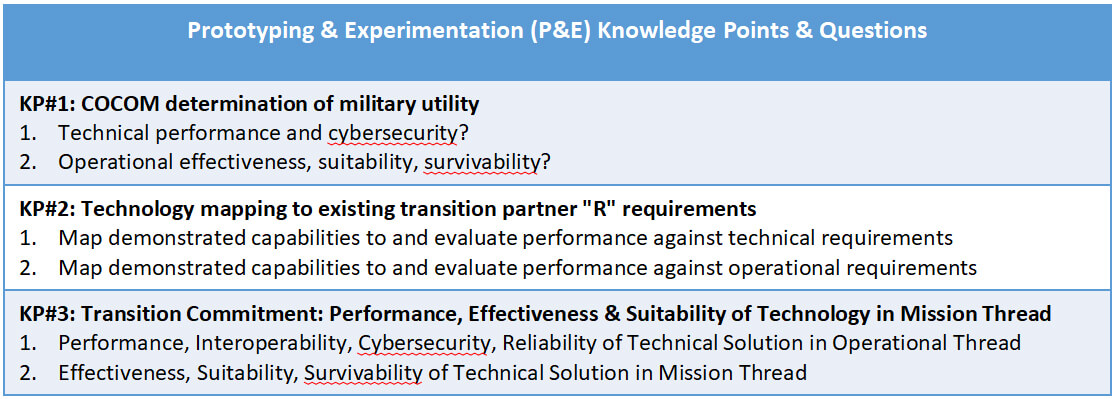

The prototyping and experimentation (P&E) phase of the capability development lifecycle has not typically used rigorous T&E methods. In addition to laboratory experiments, P&E frequently takes advantage of theater command exercises to evaluate a prototype’s ability to meet mission needs. While theater exercises provide an operationally representative environment, their primary objective is not to collect data on the performance of individual systems (or prototypes). Rather, exercises provide a means for combatant commanders to maintain trained and ready forces, exercise contingency and theater security cooperation plans, and conduct joint and multinational training exercises [GAO-17-7, https://www.gao.gov/assets/gao-17-7-highlights.pdf]. Further, the process of transitioning prototypes into acquisition programs of record or directly into operations is not well defined. The DSEF can serve as a planning tool that brings T&E best practices to bear on both issues. First, in defining the decision space of the P&E DSEF, the prototype developers collaborate with the potential operational users and their acquisition representatives and thereby come to understand their needs and expectations for the successful transition of the prototype to operational use. Second, the data needed from theater exercises are defined while planning the capability evaluation that will inform prototype transition. Those data needs can then be included in exercise planning so that they complement, rather than interfere with, the exercise objectives. Thus, prototype transition decisions informed by capability evaluation, combined with DSEF-guided collaboration on exercise planning, can help accelerate the delivery of effective operational capability to the field. Table 3 illustrates a notional P&E DSEF decision space.

Table 3 Prototyping & Experimentation (P&E) DSEF Decision Space

Operational System-of-Systems Enterprise Architecture or Integrated Portfolio Review

Acquisition and fielding decision-making for systems of systems, operational architectures, or mission-focused portfolios can be challenging from both a timing and a capability evaluation perspective. Some systems within the architecture or portfolio may be meeting requirements while others lag in performance or schedule, making the decision-makers’ task difficult. A System-of-Systems DSEF can provide a high-level guide to mission-focused capability evaluation to inform development, acquisition, and operational decisions. In the case of the System-of-Systems DSEF, structuring the capability space can be as much of a challenge as structuring the decision space, or more. The DSEF, in collaboration with System-of-Systems Mission Engineering, will help identify the mission-impacting capabilities to be evaluated.

One way to address the System-of-Systems challenge is to build a tiered or hierarchical DSEF that spans from the Enterprise level down to individual systems or components. The Enterprise DSEF decision space focuses on overarching mission objectives or ME’s mission threads, with decisions and questions focused on the acceptability of operational and technical capabilities at key capability delivery and fielding points. For example, the decisions may focus on informing capability development and integration, time-phasing of the architecture implementation, and aligning operational and technical capability delivery with mission needs.

The hierarchical approach of an Enterprise DSEF flowing down to Component DSEFs will align expectations from enterprise capability acquisition strategy plans and mission needs with component capability development. Each Component DSEF will inform the component’s capability release and operational acceptance decisions while also flowing information back up to the Enterprise DSEF to show how well the component is performing as part of the enterprise.
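One way to picture the upward flow from Component DSEFs to the Enterprise DSEF is sketched below. This is an assumption-laden illustration, not a prescribed method: the three-level `Status` rating, the component and mission-thread names, and the "weakest supporting capability" roll-up rule in `roll_up` are all hypothetical choices made for the example. Only the upward flow of results is shown; the downward flow of enterprise expectations is omitted.

```python
from enum import IntEnum


class Status(IntEnum):
    """Illustrative evaluation ratings, ordered so that min() returns the weakest result."""
    NOT_MET = 0
    NOT_YET_EVALUATED = 1
    MET = 2


# Hypothetical Component DSEF results: component -> {capability: status}
COMPONENT_RESULTS = {
    "Sensor": {"detect": Status.MET, "track": Status.MET},
    "Comms": {"relay": Status.NOT_YET_EVALUATED},
    "Weapon": {"engage": Status.NOT_MET},
}

# Hypothetical Enterprise DSEF mission threads -> supporting (component, capability) pairs
MISSION_THREADS = {
    "Find-Fix": [("Sensor", "detect"), ("Sensor", "track")],
    "Find-Fix-Finish": [("Sensor", "detect"), ("Comms", "relay"), ("Weapon", "engage")],
}


def roll_up(threads, results):
    """Rate each enterprise mission thread by its weakest supporting component capability."""
    return {
        thread: min(results[component][capability] for component, capability in needs)
        for thread, needs in threads.items()
    }


if __name__ == "__main__":
    for thread, status in roll_up(MISSION_THREADS, COMPONENT_RESULTS).items():
        print(thread, status.name)
```

Here the first thread rolls up as met, while the second is rated not met because one supporting component capability has not met its criteria. A real roll-up would likely weigh capabilities by mission impact rather than applying a strict weakest-link rule.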

The definition of operational and technical capabilities, the DSEF’s “Capability Space,” should be built either from the linkage between ME’s mission threads and mission engineering threads or by performing a mission decomposition to technical capabilities. The capability definition can be organized in whatever way makes the most sense for the System-of-Systems or architecture. For example, the definition could link User Features and Operational Workflows (operational capabilities) with System Functions (technical capabilities), regardless of which systems provide those functions. Alternatively, the operational and technical capabilities could be defined by system. Again, this is meant to be a thought process and a framework for capturing that thought process, not a dictated format.
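The two ways of organizing the capability space can be seen as two views of the same underlying links. The sketch below assumes the capability space is recorded as hypothetical (operational workflow, system function, providing system) triples; `by_function` builds the view that ignores which system provides a function, and `by_system` builds the per-system view. The names and records are illustrative only.

```python
from collections import defaultdict

# Hypothetical capability-space records: (operational workflow, system function, providing system)
LINKS = [
    ("Plan mission", "Ingest intelligence feed", "System A"),
    ("Plan mission", "Generate route", "System B"),
    ("Monitor mission", "Display track picture", "System A"),
]


def by_function(links):
    """Operational workflow -> system functions, regardless of which system provides them."""
    view = defaultdict(set)
    for workflow, function, _system in links:
        view[workflow].add(function)
    return dict(view)


def by_system(links):
    """System -> the functions it provides and the workflows those functions support."""
    view = defaultdict(lambda: {"functions": set(), "workflows": set()})
    for workflow, function, system in links:
        view[system]["functions"].add(function)
        view[system]["workflows"].add(workflow)
    return dict(view)


if __name__ == "__main__":
    print(by_function(LINKS))
    print(by_system(LINKS))
```

Because both views are derived from the same records, switching between them does not require redefining the capabilities themselves.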

Summary

The Decision Support Evaluation Framework (DSEF) accelerates the delivery of capabilities to warfighters by informing decisions throughout the capability development and delivery continuum with knowledge gained from operational and technical capability evaluation, fueled by data collected from events conducted throughout the test continuum (a.k.a. TEAAC).

The DSEF provides a standard process to guide TEAAC data collection and use from events such as early wargames, experiments, exercises, live, virtual, constructive (LVC) tests, and M&S events. The data used to evaluate operational and technical capabilities ultimately inform decisions and knowledge points across the full capability development continuum. While the process of defining decision points, knowledge needs, operational and technical capabilities, and data sources is standard, the nature of the decisions and knowledge needs changes throughout the capability development continuum.

This article illustrates notional DSEF decision spaces for the major segments of the capability development continuum. The examples are notional because they serve as a starting point for tailoring the decisions and capability focus questions to the specifics of a given situation. Further, any capability developer should consider using the DSEF’s decision-evaluation-data source definition process in any situation where decision-making and product delivery can be improved with knowledge gained from capability evaluation.

References

Collins, Christopher and Kenneth Senechal; Test and Evaluation as a Continuum; The Journal of Test & Evaluation, March 2023, Volume 44, Issue 1; Available online at: https://itea.org/journals/volume-44-1/test-and-evaluation-as-a-continuum/


Leftwich, James A., Debra Knopman, Jordan R. Fischbach, Michael J. D. Vermeer, Kristin Van Abel, Nidhi Kalra; Air Force Capability Development Planning, Analytical Methods to Support Investment Decisions; RAND Project Air Force RR2931, 15 May 2018.

Griffin, Michael D., Under Secretary of Defense for Research and Engineering (USD(R&E)); Accelerating New Technologies to Meet Emerging Threats: Testimony before the U.S. Senate Subcommittee on Emerging Threats and Capabilities of the Committee on Armed Services, 115th Cong. (2018), 7-8; Available online at: https://www.armedservices.senate.gov/imo/media/doc/18-40_04-18-18.pdf

Department of Defense Prototyping Guidebook; OUSD(R&E)/P&E; November 2019 (Version 2.0).

U.S. Government Accountability Office; GAO-17-7 (Highlights); Available online at: https://www.gao.gov/assets/gao-17-7-highlights.pdf

Author Biographies

Dr. Suzanne Beers is MITRE’s Defense Systems Engineering Department Manager, leading personnel and portfolio management of six OSD-level test and evaluation (T&E), systems engineering, and strategic analysis projects. In addition to her Department Manager responsibilities, Suzanne is the Technical Lead for OSD’s Decision Support Evaluation Framework initiative. This work includes evolving the decision-evaluation-test concept from separate developmental and operational evaluation frameworks and applying it both to acquisition programs of record and to full-spectrum decision support (from S&T to sustainment, especially prototyping and experimentation) informed by operational and technical capability evaluation.

Suzanne joined MITRE in Colorado Springs after retiring from the USAF in October 2008. Her final assignment was as the Commander of Air Force Operational Test and Evaluation Center’s Detachment 4 at Peterson AFB, leading the OT&E of space, missile, and missile defense systems.

Dr. Beers holds five degrees from a wide variety of institutions of higher learning…giving her plenty of cheering flexibility during college football season…including a B.S. in Mechanical Engineering from The Ohio State University and a Ph.D. in Electrical Engineering from Georgia Institute of Technology. Suzanne caught the triathlon bug, spending the last decade swimming, biking, running, or talking triathlon with fellow enthusiasts. She has since traded in the triathlon hobby for astrophotography. She’d be happy to entertain any triathlon or astrophotography questions, in addition to those related to this article!
