MARCH 2024 | Volume 45, Issue 1

Transforming the Testing and Evaluation of Autonomous Multi-Agent Systems: Introducing In-Situ Testing via Distributed Ledger Technology





Mr. Stuart Harshbarger

Associate Technical Director, Research Directorate, National Security Agency


Michael Collins

Technical Director, NSA Visiting Professors, National Security Agency


Abstract

This paper explores the potential of integrating consensus-enabled distributed ledger technology (C-DLT) for in-situ testing of complex adaptive AI-enabled systems (CA2IS). As autonomous multi-agent systems (AMAS) evolve, the underlying statistical basis of AI/ML-enabled systems necessitates the adoption of novel approaches to system Test and Evaluation (T&E). C-DLT-enabled in-situ testing aims to capture real-world context and emergent behaviors in these systems within a global frame-of-reference model. The proposed method also supports periodic testing of operational AI/ML-based systems, reflecting the dynamic nature of collaborative Continuous Learning Systems (CLS) as they adapt to new training data, new operational environments, and changing environmental conditions. The performance trade-offs that arise within this vision of collaborative CLS underscore the need for the proposed C-DLT-enabled in-situ testing method.

Keywords: Test and Evaluation, Artificial Intelligence, Autonomous Multi-Agent Systems, Adaptive Systems, Collaborative Learning, Continuous Learning

Abbreviations:

AI Artificial Intelligence

AMAS Autonomous multi-agent systems

C-DLT Consensus-enabled distributed ledger technology

CA2IS Complex adaptive AI-enabled systems

ML Machine learning

T&E Test and evaluation

XAI Explainable AI

Introduction

Autonomous multi-agent systems (AMAS) and, more recently, complex adaptive AI-enabled systems (CA2IS) have become increasingly important in fields such as dynamic warfighting environments (De Lima, 2022), humanitarian assistance and disaster response search and rescue operations (Feraru, 2020; Drew, 2021), agriculture and farming (Delavarpour, 2021), and critical infrastructure applications such as traffic management and energy distribution (Buzachis, 2020). These complex systems may consist of multiple interconnected components, known as agents, that collaborate to achieve specific objectives. The agents are often heterogeneous, possessing different capabilities, roles, or behaviors, which adds a layer of complexity to the system. For instance, a coordinated team of drones, ground robots, and rescue personnel can work together to assess damage and coordinate relief efforts in the aftermath of a natural disaster. In future urban settings, CA2IS could optimize traffic flow by coordinating the actions of autonomous vehicles, traffic lights, and road sensors.

A key feature of CA2IS is their ability to adapt, learn, and make decisions autonomously. They often employ AI/ML-enabled distributed sensing, which may rely on probabilistic algorithms within individual agents or on the fusion of multiple sensing modalities, either within one agent or across multiple collaborating agents, through statistically based AI/ML algorithms (Chen, 2022; Jiang, 2020). They rely on embedded algorithms to make predictions or decisions without being explicitly programmed to perform the task. For example, such systems are likely to involve numerous probabilistic algorithms that efficiently, effectively, and dynamically control each agent’s autonomous behaviors for relative position, navigation, and timing. Achieving increasing levels of coordinated control and collaborative sensing is fundamentally challenging because of the inherent variability in each agent’s performance and the complex system-level effects of variable communications, network latency, and differences in precision between agents. These uncertainties, coupled with the difficulty of accurately recreating real-world environments and repeating experiments under sufficiently similar conditions, make testing and evaluating CA2IS particularly challenging.
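To make the effect of per-agent variability concrete, the sketch below fuses independent scalar estimates of the same quantity (for example, one coordinate of a target’s position) from several agents using standard inverse-variance weighting. It is an illustration under stated assumptions, not the fusion method of any particular system: it assumes each agent reports an unbiased estimate with a known variance and that the errors are independent.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # e.g. one coordinate of a target position, in metres
    variance: float  # the agent's self-reported uncertainty, in m^2 (assumed known)


def fuse(estimates: list[Estimate]) -> Estimate:
    """Inverse-variance weighted fusion of independent, unbiased estimates."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    # The fused variance is smaller than any single input variance.
    return Estimate(value=value, variance=1.0 / total)


# Two agents observe the same target; the second is less precise.
fused = fuse([Estimate(10.0, 1.0), Estimate(12.0, 4.0)])
print(fused)  # Estimate(value=10.4, variance=0.8)
```

If an agent under-reports its variance, or reports a value that is stale because of communication latency, the fused result is biased with no visible sign of it, which is one reason such interactions are hard to test from outputs alone.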

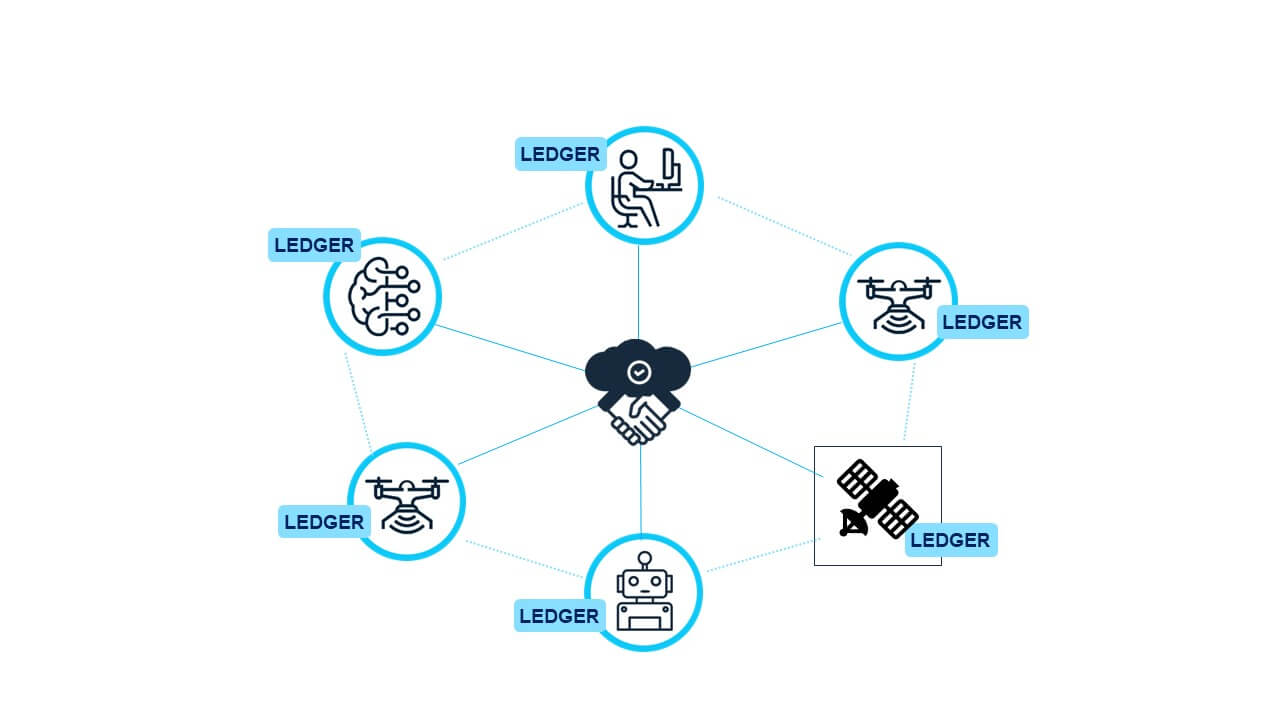

To address the diverse nature, unpredictability, and adaptability of CA2IS, we introduce the concept of using consensus-capable distributed ledger technology (C-DLT). C-DLT is a decentralized technology that allows multiple participants to share and update information across a network without relying on a central authority (Gorbunova, 2022). C-DLT can provide inherent ordering of the network interactions and transactions, in addition to consensus on ledger entries across the distributed network. These features underlie the potential of C-DLT to enable an effective framework for in-situ testing (i.e., testing while operating) of CA2IS.

In this paper, we examine the potential of C-DLT to address many of the challenges of testing and evaluating CA2IS. We explore integrating a C-DLT structure with embedded system parameters (such as individual agent pose, global frame-of-reference location, AI/ML model performance, and measures of onboard processor timing variability) as an enabling technology and potential architectural solution. The concept’s significance lies in its potential to enhance system-level (and mission-level) testing and evaluation of CA2IS operational behaviors that result from interactions among the individual agents, with users and operators, and with a physical environment in which repeatability is difficult to ensure. Such analysis is particularly relevant, and necessary, for systems supporting national defense, intelligence collection, or potential weapons system applications. The approach aims to enhance system performance, reliability, safety, and resilience in real-world scenarios through improved testing and evaluation provisions and, by extension, through CA2IS architectural considerations (National Science and Technology Council, 2023). These benefits are not limited to the areas above but extend to a wide range of industries and domains; for instance, sectors such as healthcare, transportation, and critical infrastructure could benefit greatly from this testing framework.

Background on Current CA2IS Testing

In traditional CA2IS testing methods, one establishes a set of requirements and develops test parameters to exercise the system across a representative set of operating conditions. One then conducts repeated tests to ensure that the system meets the specified requirements and performs as expected across the operating variables under test. During this process, a report detailing the system’s behavior is generated from a characterization of performance and a quantification of variation from expected results. However, even for a single smart element that uses AI/ML within a complex system, performance becomes probabilistic and may be highly variable, depending on the fit of the model’s training data and how well that data reflects operational conditions. Factors such as time of day, season, and environmental changes can affect subsystem outputs, making consistently repeatable outcomes impossible to achieve, even though results may still be statistically representative of a particular operational instantiation. Repeating a test under exactly the same background noise, environmental conditions, and timing and latency conditions is not feasible given the spectrum of variables involved.
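One way to act on results that are statistically representative rather than repeatable is to accept a requirement on the distribution of outcomes across trials instead of on any single run. The sketch below is a generic illustration, not a procedure from the sources cited here: it assumes independent trials, a per-trial performance score, and a normal approximation for the mean, and it accepts the requirement only if the lower bound of a two-sided confidence interval on the mean score clears the threshold.

```python
import math
from statistics import NormalDist, mean, stdev


def meets_requirement(scores: list[float], threshold: float,
                      confidence: float = 0.95) -> tuple[bool, float]:
    """Return (accepted, lower_bound) for the mean of per-trial scores.

    Uses the lower bound of a two-sided normal-approximation confidence
    interval; assumes independent trials and at least two scores.
    """
    if len(scores) < 2:
        raise ValueError("need at least two trials to estimate variability")
    se = stdev(scores) / math.sqrt(len(scores))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    lower = mean(scores) - z * se
    return lower >= threshold, lower


# Detection accuracy from five runs that were not (and could not be) identical.
runs = [0.91, 0.88, 0.93, 0.90, 0.89]
print(meets_requirement(runs, threshold=0.85)[0])  # True
print(meets_requirement(runs, threshold=0.90)[0])  # False
```

With only a handful of trials, a Student t quantile in place of the normal one would give a more conservative bound.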

This difficulty stems from the numerous variables and conditions present in real-world settings that are hard to replicate in controlled or simulated environments. Factors such as shadows, foliage, weather, and other environmental conditions can affect the output (in terms of accuracy, confidence, and reliability) of the sensing or control algorithms used in CA2IS, which are inherently statistical and depend upon representative training data that closely matches the target application (and therefore the test conditions). As the scale (number of collaborating agents) and the complexity of tasks and systems increase, it becomes increasingly difficult, if not impossible, to identify and test all possible scenarios, and it becomes unrealistic to expect individual test scenarios to be repeated with the same quantitative results. Developing a traditional test plan by designing representative experimental scenarios for repetitive test and evaluation is increasingly challenging, and validating performance through repeatable outcomes is no longer a viable testing paradigm (Ahner, 2018; Mitola, 2023; Hobson, 2023; Lanus, 2021).

Current methods of fusing information from multiple sensing platforms often face challenges due to the ambiguities and uncertainties arising from the differing frames of reference among agents and sensors. Expanding from a single unit to multiple collaborative units introduces further uncertainties, arising from the performance of individual machine learning algorithms, relative positioning, and timing variability due to communications latency and data exchange. Details such as the ordering of command and control sequences and the order of embedded processing further complicate matters, as does the use of different machine learning algorithms on each platform. Together, these environmental, temporal, and positional uncertainties can lead to more complex system performance variability (Quan, 2023).

The Cynefin framework is a decision-making model that provides a typology of complexity contexts (Kurtz, 2003). It helps clarify that these systems exist in the complex domain, where cause-and-effect relationships can be understood only in retrospect because of the unpredictable and emergent nature of the system. Testing methods that are effective in the framework’s ordered domains, where cause and effect can be established in advance, struggle to address the complexity of CA2IS. When expanding from a single unit to a set of collaborative units, uncertainties from individual machine learning algorithms are compounded by uncertainties from relative position and from temporal variability due to latency and information exchange, and fusing the outputs of different machine learning algorithms across platforms adds further complexity.

This complexity brings several challenges to current testing and evaluation methods. A significant challenge is developing a representative set of scenarios for individual agents and systems of agents. Traditional testing methods are not able to address all possible operational scenarios and can only test pre-identified cases of interest. This leaves unanticipated or overly complex scenarios untested.

Given these limitations, other testing methods have been explored. For instance, standoff testing, which uses remote measurements and telemetry to observe system behavior from a distance, may not provide detailed insight into the underlying reasons for the system’s actions, such as intra-agent processing and the localized timing of inter-agent command and control messaging or data exchange. This lack of insight complicates evaluation. Modeling and simulation techniques, although they incorporate randomness, may overlook critical edge cases because of their low probability of occurrence in the modeled space (Ahner et al., 2018; Haugh et al., 2018; Porter, 2020; Tate & Sparrow, 2018). AI-enabled systems also often lack transparency, complicating testing because evaluators struggle to understand the system’s decision-making rationale (Porter et al., 2020). Research on explainable AI (XAI) aims to make the decision-making processes of AI systems more interpretable and understandable. By providing clear explanations of AI decisions, XAI may help evaluators better understand system behavior and thereby improve the testing and evaluation process (de Bruijn, 2022). However, these insights may explain the “why” behind a behavior without necessarily explaining the “how,” and may not fully address timing and precision considerations. These limitations highlight the need for better understanding and for innovative testing approaches beyond XAI alone. C-DLT methods can provide enhanced instrumentation and ledger entries (logs) that better capture the complexities of CA2IS interactions for post-test (post-event) analysis. C-DLT methods can also provide a mechanism for real-time behavior monitoring and anomaly detection during operations, helping to ensure CA2IS safety, reliability, and effectiveness in real-world applications.

When testing CA2IS, understanding the perceptions and decision-making processes of the agents involved is crucial, as these processes can influence agent interactions and overall emergent behaviors (Arnold et al., 2018; Haugh et al., 2018; Tolk, 2019). This understanding enables testers to see how agents interpret their environment, process incoming data, and make decisions, helping to ensure system stability and performance by identifying and mitigating unintended or undesirable emergent behaviors. To fully comprehend these evolving systems’ decision-making processes, it is vital to capture both the agent’s initial state or conditions and its subsequent changes, as well as the environment, command and control sequences, processing order and timing variations, and other parameters that trigger or affect specific behaviors. This requires access to the broad spectrum of data used in the decision-making process, as well as information about the state of sensors, environmental conditions, executed algorithms, and other relevant factors for all agents involved. This comprehensive view allows a holistic understanding of the system’s behavior and its contributing factors (Arnold et al., 2018; Haugh et al., 2018; Tolk, 2019). Ideally, we encourage a fully distributed test and evaluation architecture in which each unit under test holds a complete record of inter-agent command and control messages, relative pose and position data, internal status and process indicators, local and global time references, and consensus values for common parameters of interest.
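The per-agent record described above could be captured as one ledger entry per event. The schema below is hypothetical: the class name, field names, units, and choice of fields are illustrative assumptions drawn from the parameters listed in the text (command and control events, pose, internal status, local and global time, and model confidence), not a defined standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass(frozen=True)
class LedgerEntry:
    """Hypothetical schema for one agent event recorded on the ledger."""
    agent_id: str
    event: str                        # e.g. "c2_msg_sent", "detection"
    local_time_s: float               # agent's own clock at the event
    global_time_s: Optional[float]    # reference time, once agreed by consensus
    pose: tuple[float, float, float]  # x, y (m) and heading (rad), global frame
    pose_sigma_m: float               # agent's estimate of its position error (m)
    model_version: str                # AI/ML model behind any decision
    model_confidence: float           # score reported by that model
    status: dict = field(default_factory=dict)  # internal health/process flags

    def to_json(self) -> str:
        # Sorted keys give a stable encoding, e.g. for hashing the entry.
        return json.dumps(asdict(self), sort_keys=True)


entry = LedgerEntry("uav-1", "detection", 812.4, None, (143.2, -57.9, 1.57),
                    2.5, "detector-v3", 0.87, {"battery": 0.64})
print(entry.to_json())
```

Keeping both the local and the agreed global time on every entry is what later allows timing uncertainty to be examined rather than hidden.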

Capturing, managing, and evaluating data in CA2IS is also challenging due to the involvement of different organizations, each with their own configurations and standards, as well as working with different authorities and/or data access permissions. These interoperability challenges and varying levels of transparency make it difficult to identify and address issues in the testing and evaluation processes between multiple organizations or partners.

Current physical test ranges may lack the instrumentation or capabilities needed to fully replicate or analyze observed system behaviors, given the complexity and adaptability of these systems. Digital testbeds, which offer controlled environments for testing and evaluation, may not exist or may have limited capacity to handle the extensive state spaces needed to gain representative insight into onboard processes, and may lack the capacity to record or log the intricate interactions characteristic of these systems. These factors add to the complexity of testing and evaluating CA2IS and emphasize the need for innovative testing approaches.

One last area of consideration involves Test & Evaluation over a system’s lifespan (lifecycle). Historically, system updates have required incremental, representative integration testing in a corresponding System Integration Laboratory (SIL), and perhaps a return to the test range, depending on the scope of the change. The technology update interval for CA2IS is much shorter than that of previous systems. First, as noted above, the AI/ML models used in CA2IS may continuously learn and adapt. Second, we anticipate that individual collaborative agents may evolve and receive updates on different schedules. For example, early versions of these systems may not be encrypted or monitored for cybersecurity. As the system progresses through developmental testing, or as new threats emerge over time, some agents may adopt encryption or cybersecurity measures while others do not. Such discrepancies can further affect the synchronization of crucial data, adding another layer of complexity to the testing process. We envision a continuous testing regime in which C-DLT capabilities allow periodic analysis of CA2IS data to ensure operational integrity as the various system components are updated asynchronously across the CA2IS lifecycle.

Background on Current In-Situ Testing

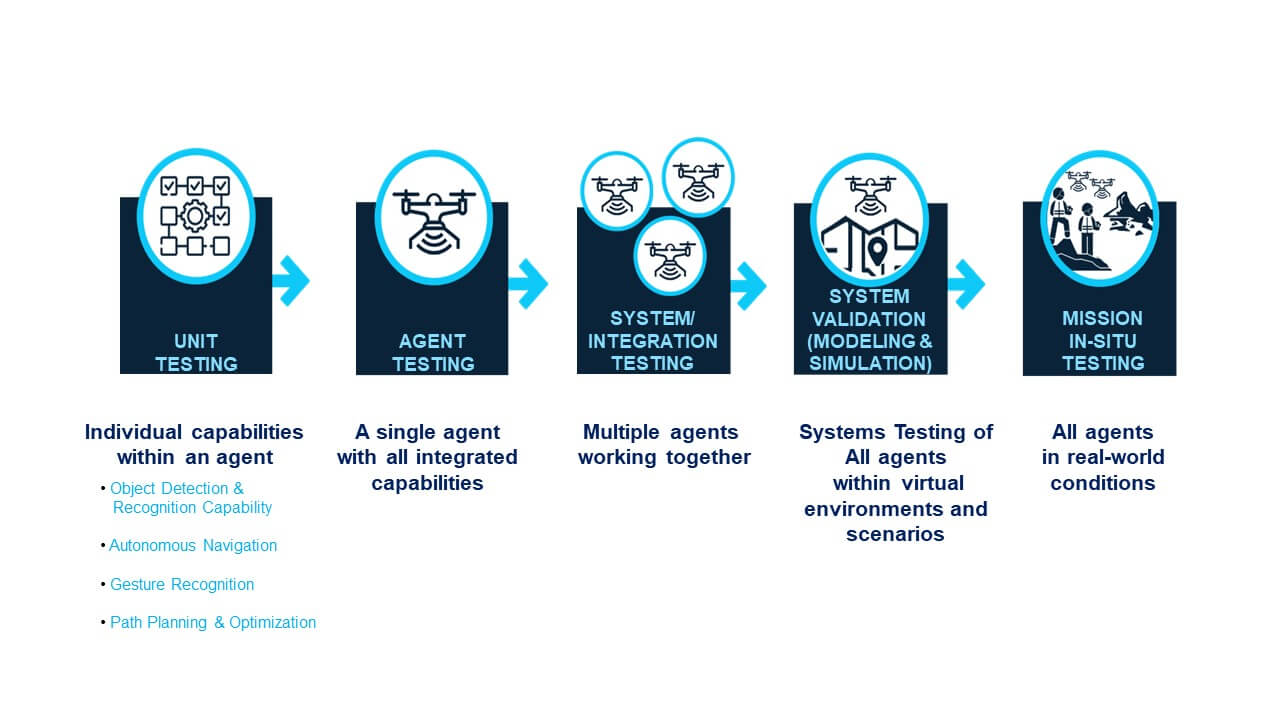

Testing autonomous systems becomes increasingly complex as we move from unit testing of individual capabilities within a single agent to testing multiple collaborating agents in a real-world environment. The initial stage of unit testing focuses on verifying the functionality of each capability in isolation. Examples include testing object detection, autonomous navigation, or path planning. These capabilities are then integrated into an agent (individual platform). As we progress from testing singular capabilities to single agent systems, and then multiple-agents or systems of systems, the focus shifts to evaluating the interactions and integration of these capabilities. Figure 1 below depicts an example testing process flow.

Figure 1: Testing Phases

Real-world testing of collaborating agents adds another layer of complexity, as the agents must adapt to dynamic and unpredictable conditions, such as varying environmental factors, obstacles, and uncertainties. This stage requires a thorough understanding of how agents influence each other’s behavior and the emergent properties that arise from their interactions. Robust testing methodologies are crucial for effectively capturing and managing the vast amount of data generated during these tests, and for accurately evaluating the system’s performance, adaptability, and effectiveness in addressing real-world challenges.

In-situ testing is a method for evaluating components or systems within their intended operational environment, allowing researchers and engineers to observe and analyze system behavior, including interactions with operators/users under real-world conditions. In the context of CA2IS, in-situ testing becomes particularly important due to the complex and adaptive nature of these systems. As the agents must adapt to dynamic conditions and interact with each other, in-situ testing allows for a more accurate evaluation of their performance, adaptability, and effectiveness in addressing real-world challenges.

C-DLT can play a crucial role in in-situ testing of CA2IS. It serves as a tool for recording and reconciling both internal and external agent parameters. Combined with dynamic analysis, it enables real-time capture and analysis of data related to the environment, sensors, individual agent interactions, decision-making processes, and emergent system behaviors. The ledger information can be monitored in real time for testing purposes and preserved as a record for post-event analysis and performance confirmation.

Data Collection and Management Challenges in Systems Testing

Testing these systems involves capturing both the initial state or conditions and the subsequent changes or command and control messaging that trigger specific behaviors. This requires access to key data and parameters associated with the decision-making process, as well as information about the individual sensors’ or agents’ state, environmental conditions, executed algorithms, and other relevant factors (relative positions, status, etc.) for all agents involved at the appropriate point in time. Collecting, managing, and correlating the vast and varied data inputs from diverse conditions and numerous sensors is a challenge.

Several testing methods and tools are available to support test and evaluation of CA2IS, such as the publish/subscribe method, centralized data collection methods, and standoff testing. These methods employ varied techniques to gather and assess data from agents and sensors. The publish/subscribe method is a messaging pattern used in distributed systems, including CA2IS: agents generate data and send it to a message broker, which distributes the data to other agents that have subscribed to specific types of data. This method allows efficient communication and data exchange among multiple agents without requiring direct connections between them. However, it does not inherently provide a mechanism for synchronizing data across multiple agents and sensors, nor does it guarantee that data will be delivered in the order in which it was used or generated (Baldoni, 2012; Happ, 2016). Addressing this gap is a key aspect of the consensus-capable DLT proposed in this approach.
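The ordering gap can be illustrated with a classic technique that is much simpler than consensus: Lamport logical clocks, named here as a swapped-in illustration rather than part of the proposed approach. Each agent keeps a counter, stamps every message it publishes, and on receipt advances its counter past the sender’s stamp, so an event that could have caused another always carries a smaller stamp. The agent names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LamportClock:
    """Logical clock held by one agent (Lamport's scheme)."""
    time: int = 0

    def tick(self) -> int:
        """Advance for a local event, including publishing a message."""
        self.time += 1
        return self.time

    def receive(self, msg_time: int) -> int:
        """Merge the stamp carried by an incoming message."""
        self.time = max(self.time, msg_time) + 1
        return self.time


uav, ugv = LamportClock(), LamportClock()
sent = uav.tick()             # UAV publishes a detection, stamped 1
ugv.tick()                    # UGV has two unrelated local events
ugv.tick()
received = ugv.receive(sent)  # receipt is stamped after the send
print(sent, received)         # 1 3
```

What logical clocks do not provide is agreement among agents on the order of concurrent events, or any link to physical time; that remaining gap is what a consensus mechanism is meant to close.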

Centralized data collection methods simplify data management, analysis, and evaluation by consolidating data from multiple agents and sensors in a central repository. However, this approach may not accurately record the exact ordering of events in large-scale systems and can introduce bottlenecks and single points of failure. Standoff testing, on the other hand, non-intrusively observes and evaluates CA2IS from a distance but has limitations, including limited data access, lack of real-time synchronization, potential data inconsistency, limited scalability, and inability to track data provenance. Neither of these methods provides for recording or logging internal status, algorithm confidence scores, the sequencing of internal processes relative to system command and control communications, and similar information.

Additionally, regardless of the testing method used, achieving a close proximity of fit to a global reference model is essential for evaluating how well the system conforms to established standards and expectations. We propose an enhanced global reference model as a (future) standardized framework, supported by guidelines and benchmarks, for understanding, comparing, and evaluating aspects of a system such as performance, reliability, and interoperability, with consideration of the relative position of individual agents to a target, individual agent algorithm confidence, estimates of pose/position error, temporal uncertainty, and the like.

The proximity of fit refers to the degree of alignment between observed system behaviors and the expectations or parameters defined by the global reference model. It is crucial because it indicates how well the system’s behavior, including agent interactions and decision-making processes, adheres to the standards and expectations set by the model (Reese, 2018).
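The text does not define a formula for proximity of fit. As one hypothetical way to quantify it for position data, the sketch below scores the fraction of samples in which an agent’s observed position lies within k of its own reported standard deviations of the position the reference model expects. The metric, the function name, and the default k = 2 are assumptions for illustration only; a full reference model would also cover timing, confidence, and decision criteria.

```python
import math


def proximity_of_fit(observed, expected, sigmas, k=2.0):
    """Hypothetical fit score in [0, 1]: share of samples whose observed (x, y)
    lies within k * sigma of the reference-model expectation.

    observed, expected: sequences of (x, y) in the global frame (m)
    sigmas: per-sample position uncertainty reported by the agent (m)
    """
    if not observed or not (len(observed) == len(expected) == len(sigmas)):
        raise ValueError("inputs must be non-empty and of equal length")
    within = sum(
        math.hypot(ox - ex, oy - ey) <= k * s
        for (ox, oy), (ex, ey), s in zip(observed, expected, sigmas)
    )
    return within / len(observed)


score = proximity_of_fit(
    observed=[(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)],
    expected=[(0.0, 0.5), (1.0, 0.0), (0.0, 0.0)],
    sigmas=[0.5, 0.5, 1.0],
)
print(round(score, 3))  # 0.667
```

Normalizing by each agent’s own uncertainty means an imprecise agent is not penalized for errors it honestly reports, while an overconfident one is.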

To accurately assess the proximity of fit, it is necessary to record all command communications and processing decision inputs at each individual agent, and to understand the relative proximity of the agents to each other at all points in time, adjusted for each agent’s own estimate of time. Synchronization plays a vital role in ensuring that data originating from different agents, sensors, and models maintain consistency and alignment concerning timing and event order. Without proper synchronization, it becomes challenging to accurately correlate data and analyze interactions among agents and their decision-making processes, ultimately affecting the proximity of fit to the global reference model.
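Adjusting each agent’s timestamps to a common time base requires an estimate of its clock offset. The sketch below uses the standard four-timestamp offset and delay calculation from NTP, shown as an assumed, swapped-in technique rather than the authors’ method. It assumes the outbound and return delays are roughly symmetric; any asymmetry appears directly as offset error, which is one of the temporal uncertainties described above.

```python
def clock_offset(t0: float, t1: float, t2: float, t3: float) -> tuple[float, float]:
    """NTP-style offset and round-trip delay from one request/reply exchange.

    t0: request sent      (agent clock)
    t1: request received  (reference clock)
    t2: reply sent        (reference clock)
    t3: reply received    (agent clock)
    Returns (offset, delay); add offset to agent time to get reference time.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


# Agent clock runs 5 s behind the reference; each one-way trip takes 1 s.
offset, delay = clock_offset(t0=100.0, t1=106.0, t2=106.5, t3=102.5)
print(offset, delay)   # 5.0 2.0
print(110.0 + offset)  # 115.0, the reference time for agent time 110.0
```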

Integrating this data into a global reference model remains a challenge as it requires effective synchronization and data fusion techniques to accurately represent the system’s behavior and interactions in real-world scenarios.

C-DLT Support of In-Situ Testing


In response to the challenges discussed, we propose the use of consensus-capable distributed ledger technology (C-DLT), such as Hashgraph or similar technologies. These technologies have been shown to provide low-overhead time-sequence ordering and consensus for distributed ledger entries across the system (Kordy, 2014). C-DLT is a decentralized approach that enables multiple agents (participants) to share and update information across a network without relying on a central authority. This addresses some of the challenges associated with observing and analyzing CA2IS agents from a distance and over the lifecycle of the system.

Key features of C-DLT include immutability and transparency, with extensions to cryptographic security, data provisioning, and real-time performance monitoring. Consensus mechanisms, an essential aspect of DLT, facilitate agreement among participants regarding the state of the ledger. This ensures data consistency and synchronization across the network, addressing the challenges of data management and synchronization in CA2IS testing.
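Immutability in a distributed ledger rests partly on hash linking: each entry commits to the hash of the one before it, so altering any recorded value invalidates the links that follow. The minimal single-node sketch below, using Python’s standard `hashlib`, shows only that tamper-evidence property. It assumes JSON-serializable records and deliberately omits replication, signatures, and consensus, which a C-DLT adds on top; the class and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash preceding the first entry


def link_hash(prev_hash: str, record: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the record."""
    body = json.dumps(record, sort_keys=True).encode()
    return hashlib.sha256(prev_hash.encode() + body).hexdigest()


class HashChainedLog:
    """Append-only log in which each entry commits to its predecessor."""

    def __init__(self) -> None:
        self.entries: list[tuple[dict, str]] = []

    def append(self, record: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        h = link_hash(prev, record)
        self.entries.append((record, h))
        return h

    def verify(self) -> bool:
        """Recompute every link; False if any record changed after logging."""
        prev = GENESIS
        for record, h in self.entries:
            if link_hash(prev, record) != h:
                return False
            prev = h
        return True


log = HashChainedLog()
log.append({"agent_id": "uav-1", "event": "detection", "t": 812.4})
log.append({"agent_id": "ugv-2", "event": "c2_ack", "t": 812.9})
print(log.verify())             # True
log.entries[0][0]["t"] = 815.0  # tamper with an earlier record
print(log.verify())             # False
```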

C-DLT Support of Data Synchronization

Data synchronization is a crucial aspect of testing CA2IS. C-DLT captures real-time transactions involving autonomous agents, sensors, and models from various perspectives, including individual sender and receiver states, relative orientation, and subsequent actions at specific points in time. Synchronizing this data is critical because it ensures consistency and alignment of timing and event order across different agents, sensors, and models.

Proper synchronization allows for accurate correlation of data and comprehensive analysis of interactions among agents and their decision-making processes. This, in turn, enables a thorough review of the agents’ perceptions, decision processes, and the underlying data guiding their actions. The ultimate result is an accurate assessment of the system’s proximity of fit to the global reference model.

The consensus mechanism in DLT plays a crucial role in data synchronization. It ensures all nodes within the network agree on the chronological sequence of events and the state of the ledger. This mechanism enables effective synchronization of data across multiple agents and sensors, ensuring all components of the multi-agent system have a consistent view of the environment and each other’s actions.
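In Hashgraph, one of the C-DLTs named above, an event’s consensus timestamp is, in essence, the median of the times at which the members received it, and that timestamp helps fix the event’s place in the agreed order. The sketch below keeps only that final step and is an illustration, not Hashgraph’s algorithm: it assumes every node already holds the same set of reported receipt times (the hard part, which the real protocol achieves through gossip and virtual voting) and shows why a median keeps the agreed order robust to a single faulty report.

```python
from statistics import median


def consensus_order(receipts: dict[str, dict[str, float]]) -> list[str]:
    """Order events by the median of their reported receipt times.

    receipts maps event_id -> {node_id: time that node received the event}.
    Ties are broken by event_id, so every node evaluating the same receipts
    derives exactly the same sequence.
    """
    stamp = {eid: median(times.values()) for eid, times in receipts.items()}
    return sorted(stamp, key=lambda eid: (stamp[eid], eid))


receipts = {
    "msg-A": {"n1": 10.0, "n2": 10.05, "n3": 9.9},
    # n3 reports a faulty time for msg-B; a mean would move msg-B first,
    # but the median (10.1) is unaffected.
    "msg-B": {"n1": 10.2, "n2": 10.1, "n3": 1.0},
}
print(consensus_order(receipts))  # ['msg-A', 'msg-B']
```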

Figure 2: Consensus on Data Value

Collaboration

Collaboration is key to both the function and the testing of CA2IS, and C-DLT can significantly enhance it. C-DLT’s secure and transparent data-sharing capabilities provide a single source of truth for all stakeholders and help ensure the security and integrity of the data. This is particularly beneficial for collaborative testing of CA2IS, as an appropriately defined ledger format can alleviate challenges related to interoperability and coordination among heterogeneous agents. Our proposed use of C-DLT enables the seamless integration of diverse agents into a unified testing environment. It includes provisions for registering agents with specific data access permissions and authorities, for access control and compliance. This approach fosters collaboration among researchers, allowing them to jointly evaluate and improve the performance of their agents within a shared testing environment that complies with established policies.
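The registration provision could take many forms. The sketch below assumes a simple, hypothetical policy in which each agent is registered to its owning organization and each evaluator is granted read access per organization, so that an evaluator’s view of the shared ledger is filtered to entries it may see. The class and method names are illustrative only; a real deployment would enforce such rules cryptographically rather than in application code.

```python
from dataclasses import dataclass, field


@dataclass
class AccessRegistry:
    """Hypothetical registry: agent -> owning org, evaluator -> readable orgs."""
    owners: dict[str, str] = field(default_factory=dict)
    grants: dict[str, set[str]] = field(default_factory=dict)

    def register_agent(self, agent_id: str, org: str) -> None:
        self.owners[agent_id] = org

    def grant(self, evaluator: str, org: str) -> None:
        self.grants.setdefault(evaluator, set()).add(org)

    def visible(self, evaluator: str, entries: list[dict]) -> list[dict]:
        """Return only entries from agents whose owner the evaluator may read."""
        allowed = self.grants.get(evaluator, set())
        return [e for e in entries if self.owners.get(e["agent_id"]) in allowed]


reg = AccessRegistry()
reg.register_agent("uav-1", "agency-a")
reg.register_agent("ugv-2", "agency-b")
reg.grant("evaluator-a", "agency-a")
ledger = [{"agent_id": "uav-1", "t": 1.0}, {"agent_id": "ugv-2", "t": 1.1}]
print(reg.visible("evaluator-a", ledger))  # [{'agent_id': 'uav-1', 't': 1.0}]
```

Entries from unregistered agents are hidden from everyone under this policy, which keeps the default closed.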

Hypothetical Example of C-DLT in Testing

This section presents a hypothetical scenario to illustrate how the proposed C-DLT could be used in testing and evaluation (T&E) of autonomous agents. Although the scenario is hypothetical, it is designed to reflect plausible real-world operations and challenges that such systems might encounter. It builds upon and extends ongoing collaborative work with the US Military Academy at West Point, the US Naval Academy, the US Air Force Academy, Vanderbilt University, and others to investigate the feasibility of the proposed C-DLT approach (Koutsoukos, 2023).

In this extended scenario, missing hikers need to be found in a remote canyon. Search and rescue teams, including ground-based, aerial, and water units from various agencies, are deployed, each equipped with sensors and autonomous agents. These agents have specific roles, such as navigating rough terrain, conducting aerial reconnaissance, or navigating water bodies. They work together, share information, and make decisions in real time to locate the missing hikers and coordinate rescue efforts.

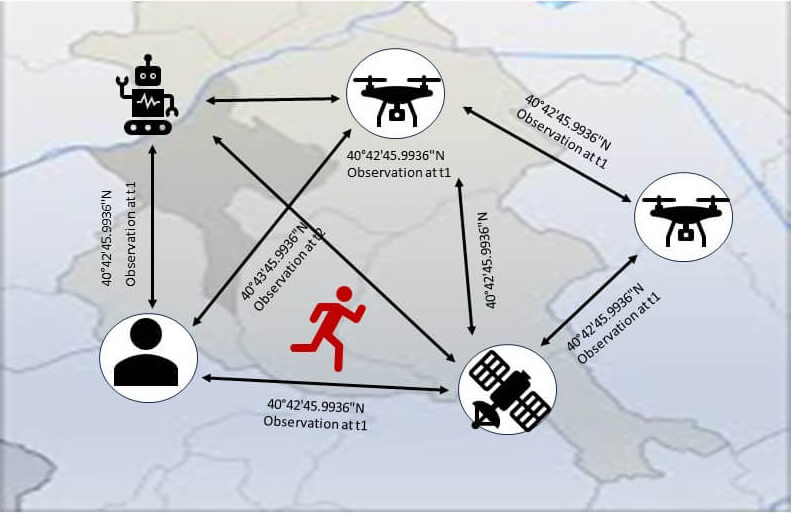

In this scenario, as the agents are deployed, evaluators can initiate the real-time testing process, monitoring the autonomous agents and sensors, developed by different organizations, as they begin scanning various areas of the forest. Throughout the operation, individual agents can continuously collect data from their sensors, environment, and interactions with other agents, including the expanded parameters representing pose, position, timing, and AI/ML model versioning and scoring, and record it on the distributed ledger. This data is made available to all evaluators (within their data access privileges and constraints) in real time or can be downloaded for more in-depth post-event analysis. Using a consensus algorithm, the C-DLT can ensure that the data are recorded in a single agreed-upon sequence, maintaining consistency across all agents. The expanded frame-of-reference parameters allow post-analysis mitigation of relative position and timing uncertainties. In practice, this could be achieved by interpolating pose data using motion/path constraints combined with timing adjustments to offset system latency.
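A minimal version of this interpolation step might look like the following. It assumes the simplest motion constraint, constant velocity between consecutive logged poses, and a single known latency between when a pose was valid and when it was stamped; both assumptions, and the function name, are illustrative. Timestamps are assumed to be already mapped to the common reference time.

```python
from bisect import bisect_left


def pose_at(samples: list[tuple[float, float, float]], t: float,
            latency: float = 0.0) -> tuple[float, float]:
    """Linearly interpolate an agent's (x, y) at reference time t.

    samples: (stamp, x, y) tuples sorted by stamp, in reference time.
    latency: assumed constant delay between when a pose was valid and when
             it was stamped, so each pose was valid at stamp - latency.
    """
    times = [stamp - latency for stamp, _, _ in samples]
    if not samples or not times[0] <= t <= times[-1]:
        raise ValueError("t lies outside the logged interval")
    i = bisect_left(times, t)
    if times[i] == t:  # exact hit, no interpolation needed
        return samples[i][1], samples[i][2]
    (_, x0, y0), (_, x1, y1) = samples[i - 1], samples[i]
    w = (t - times[i - 1]) / (times[i] - times[i - 1])
    return x0 + w * (x1 - x0), y0 + w * (y1 - y0)


track = [(0.0, 0.0, 0.0), (1.0, 10.0, 0.0), (2.0, 10.0, 5.0)]
print(pose_at(track, 0.5))               # (5.0, 0.0)
print(pose_at(track, 1.0, latency=0.5))  # (10.0, 2.5)
```

Richer motion models (for example, vehicle kinematic limits) would replace the linear step, but the structure of correcting time first and then interpolating would stay the same.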

As the agents collect data from their sensors, environment, and interactions with other agents, evaluators can track the performance of individual agents and the overall system, identifying potential bottlenecks, inefficiencies, or communication issues. Evaluators can analyze the decision-making process and the factors the agents considered, verifying that the algorithms function as intended and lead to optimal search strategies. In addition, the C-DLT can be used to evaluate the interoperability of agents developed by different organizations. By providing a standardized platform for communication and data exchange, the C-DLT enables seamless integration of diverse agents into a unified testing environment. Evaluators can assess how well agents from various organizations work together, share information, and coordinate their actions, identifying any compatibility issues or areas for improvement.

During the search operation, the agents need to decide which areas to search next. Each agent might hold a different estimate of which areas are most likely to contain the missing hikers. To reach a collective decision, they may use a higher-level mission prioritization algorithm that considers the inputs of all agents. After evaluating the available information, this algorithm determines that the area near a river has the highest priority. This directive is shared among the agents, and all digital maps are updated to reflect the agreed-upon search priority.
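The text leaves the mission prioritization algorithm unspecified. One simple stand-in, assumed here purely for illustration, is a weighted average of each agent’s probability estimate per search area, with optional positive weights that might reflect, for example, sensor confidence. If the inputs and the resulting ranking were both recorded on the ledger, evaluators could later check exactly which opinions produced the agreed priority.

```python
from typing import Optional


def prioritize(opinions: dict[str, dict[str, float]],
               weights: Optional[dict[str, float]] = None) -> list[str]:
    """Rank search areas by weighted-average probability, highest first.

    opinions: {agent_id: {area: agent's probability that the hikers are there}}
    weights:  optional {agent_id: positive weight}; unlisted agents get 1.0
    """
    weights = weights or {}
    total: dict[str, float] = {}
    norm: dict[str, float] = {}
    for agent, scores in opinions.items():
        w = weights.get(agent, 1.0)
        for area, p in scores.items():
            total[area] = total.get(area, 0.0) + w * p
            norm[area] = norm.get(area, 0.0) + w
    avg = {area: total[area] / norm[area] for area in total}
    # Descending score; ties broken by name so every agent gets the same ranking.
    return sorted(avg, key=lambda area: (-avg[area], area))


opinions = {
    "uav-1":  {"river": 0.6, "ridge": 0.3, "trail": 0.1},
    "ugv-2":  {"river": 0.4, "ridge": 0.5, "trail": 0.1},
    "boat-3": {"river": 0.7, "ridge": 0.1, "trail": 0.2},
}
print(prioritize(opinions))  # ['river', 'ridge', 'trail']
```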

Figure 3: Achieving Consensus Among Agents

Evaluators can closely monitor the agents’ actions, communications, and decision-making processes as they occur in real time. The evaluators can observe how agents share information, such as the discovery of a piece of clothing, and how this information affects the agents’ behavior and search strategies. As the search operation continues, evaluators can continuously assess the performance of individual agents and the overall system. They evaluate the agents’ ability to navigate rough terrain, conduct aerial reconnaissance, or navigate water bodies, as well as the agents’ collaboration and coordination in the search and rescue mission, identifying any potential issues or bottlenecks in real time, such as communication delays, sensor malfunctions, or suboptimal decision-making.

Suppose an issue arises during the search operation: one of the aerial agents suddenly stops communicating with the rest of the team, creating a gap in search coverage and hindering overall progress. The evaluators, who have been monitoring the system’s behavior and interactions through the distributed ledger, quickly notice the communication breakdown and use the data collected in the C-DLT to investigate the problem.
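One simple way evaluators might spot such a breakdown is to compare each agent's most recent ledger transaction with the current consensus time. The sketch below assumes a hypothetical record format of `(timestamp_s, agent_id, payload)` tuples and an illustrative 10-second silence threshold; neither comes from the paper.

```python
def silent_agents(ledger, now, timeout_s=10.0):
    """Return agents whose latest ledger transaction is older than timeout_s.

    ledger: iterable of (timestamp_s, agent_id, payload) tuples (hypothetical format)
    now:    current consensus time in seconds
    """
    last_seen = {}
    for ts, agent, _payload in ledger:
        # Keep the most recent timestamp observed for each agent
        if ts > last_seen.get(agent, float("-inf")):
            last_seen[agent] = ts
    return sorted(a for a, ts in last_seen.items() if now - ts > timeout_s)


ledger = [
    (100.0, "uav-1", {"pose": (10, 4)}),
    (101.0, "uav-2", {"pose": (3, 8)}),
    (108.0, "uav-1", {"pose": (11, 5)}),
    (118.5, "uav-1", {"pose": (12, 6)}),
]
print(silent_agents(ledger, now=120.0))  # ['uav-2']
```

This check only sees agents that have written to the ledger at least once; a fuller version would also compare against the mission roster to catch agents that never reported.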

By analyzing the data, evaluators determine that the aerial agent experienced a sensor malfunction that caused it to lose its position estimate and its communication capabilities. They also find that the malfunction occurred shortly after the agent entered an area of dense foliage, which might have interfered with its sensors. With this information, evaluators can recommend adjustments to the agent’s sensor configuration or develop strategies to avoid similar issues in the future.
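Because the ledger preserves an agreed order of events, this investigation can be phrased as a query over the transactions an agent recorded shortly before it went silent. A minimal sketch, assuming ledger records are `(timestamp_s, agent_id, payload)` tuples and that payloads carry a hypothetical `terrain` field:

```python
def context_before_silence(ledger, agent_id, window_s=30.0):
    """Return the agent's transactions in the window_s seconds up to its last one.

    ledger: iterable of (timestamp_s, agent_id, payload) tuples (hypothetical format)
    """
    own = sorted((tx for tx in ledger if tx[1] == agent_id), key=lambda tx: tx[0])
    if not own:
        return []  # the agent never wrote to the ledger
    last_ts = own[-1][0]
    return [tx for tx in own if last_ts - tx[0] <= window_s]


ledger = [
    (40.0, "uav-2", {"terrain": "open", "gps_fix": True}),
    (85.0, "uav-2", {"terrain": "dense_foliage", "gps_fix": True}),
    (95.0, "uav-2", {"terrain": "dense_foliage", "gps_fix": False}),
    (96.0, "uav-1", {"terrain": "open", "gps_fix": True}),
]
recent = context_before_silence(ledger, "uav-2")
print({tx[2]["terrain"] for tx in recent})  # {'dense_foliage'}
```

A query like this only narrows the search; attributing the failure to foliage still requires the engineering judgment described above.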

Evaluators can use the C-DLT data to assess the impact of the communication breakdown on the overall search operation. They can analyze how the other agents adapted their search strategies to compensate for the missing aerial agent and determine if any critical areas were left unsearched. Based on this analysis, evaluators can suggest improvements to the CA2IS’s adaptability and resilience in the face of unexpected issues, ensuring a more robust and efficient system capable of effectively operating in complex, real-world scenarios.
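Whether critical areas were left unsearched can be framed as a set difference between the grid cells the mission required and the cells that some agent reported covering. The sketch assumes ledger records are `(timestamp_s, agent_id, payload)` tuples, a hypothetical grid-cell discretization of the search area, and a hypothetical `covered_cells` payload field; the paper defines none of these.

```python
def unsearched_critical_cells(ledger, critical_cells):
    """Return critical cells that no agent reported covering.

    ledger: iterable of (timestamp_s, agent_id, payload) tuples where payload
            may contain "covered_cells": a list of (row, col) grid cells
            (hypothetical format)
    critical_cells: (row, col) cells the mission plan marked as critical
    """
    covered = set()
    for _ts, _agent, payload in ledger:
        covered.update(payload.get("covered_cells", []))
    return set(critical_cells) - covered


ledger = [
    (10.0, "ugv-1", {"covered_cells": [(0, 0), (0, 1)]}),
    (12.0, "uav-1", {"covered_cells": [(1, 0)]}),
    (15.0, "uav-2", {"status": "no_comms"}),
]
critical = {(0, 1), (1, 0), (1, 1)}
print(unsearched_critical_cells(ledger, critical))  # {(1, 1)}
```

Running the same query on ledger slices before and after the outage would show how much of the missing agent's sector the remaining agents absorbed.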

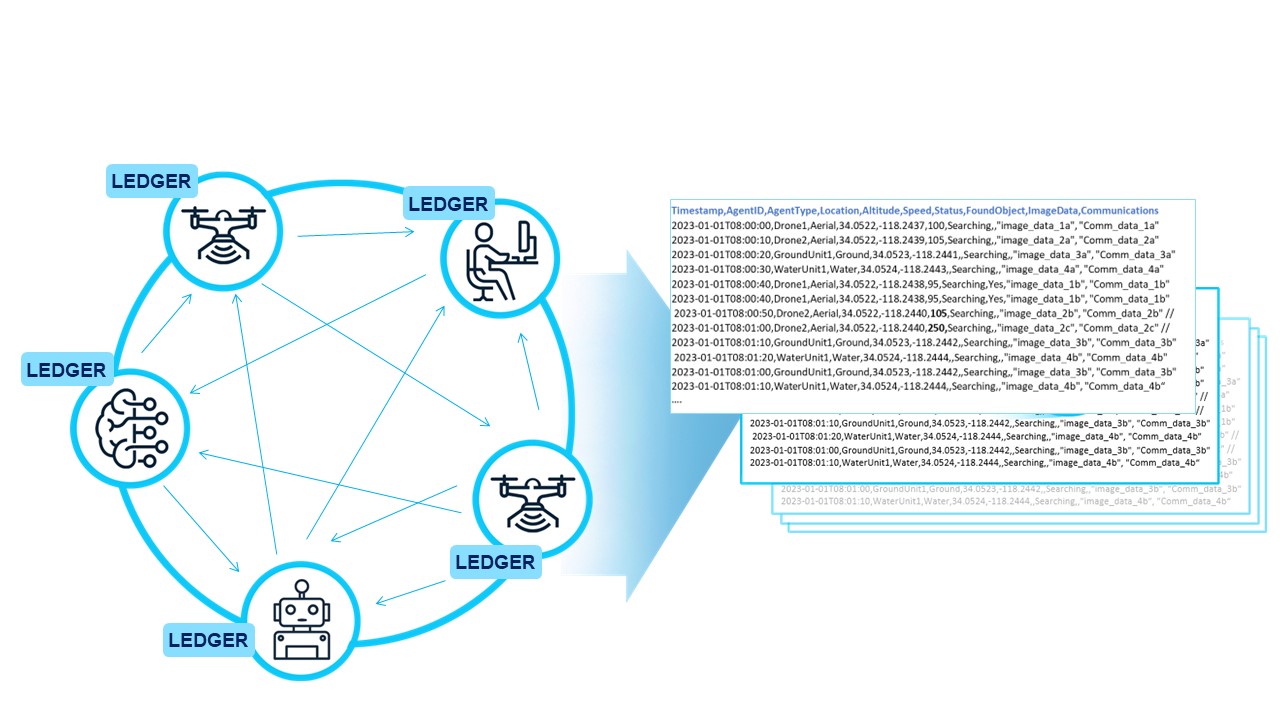

Enhancing T&E Using Ledger Data

The expanded frame of reference (pose) data and system parameters stored in the distributed ledger can be downloaded for post-processing analysis. This allows evaluators to mitigate temporal and position uncertainties introduced by communications latency and other factors. The proposed in-situ test and evaluation model provides robust data logging and recording for subsequent off-line analysis, which means that each test event potentially becomes a source of new training data. This continual influx of real-world data can be used to improve system performance and potentially expand system capabilities over time. Additionally, the recorded data and new insights gained from analysis can be incorporated into system modeling and simulation scenarios. This can enhance the realism, accuracy, and effectiveness of future tests, ensuring that the testing process continually adapts and improves in line with the system it is evaluating.
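One common way to reduce such temporal uncertainty in post-processing is to resample every agent's pose onto a shared time base. The sketch below linearly interpolates 2-D positions at a query time; linear interpolation and the `(t, x, y)` sample format are assumptions chosen for illustration, and a real pipeline would likely also correct for known per-link latency.

```python
from bisect import bisect_left


def pose_at(samples, t):
    """Linearly interpolate an agent's (x, y) position at time t.

    samples: list of (t, x, y) tuples sorted by t (hypothetical ledger export)
    Times outside the sampled range are clamped to the first or last sample.
    """
    times = [s[0] for s in samples]
    i = bisect_left(times, t)  # first index with times[i] >= t
    if i == 0:
        return samples[0][1:]
    if i == len(samples):
        return samples[-1][1:]
    t0, x0, y0 = samples[i - 1]
    t1, x1, y1 = samples[i]
    a = (t - t0) / (t1 - t0)  # fraction of the way from sample i-1 to sample i
    return (x0 + a * (x1 - x0), y0 + a * (y1 - y0))


uav = [(0.0, 0.0, 0.0), (2.0, 10.0, 4.0), (4.0, 20.0, 4.0)]
print(pose_at(uav, 1.0))  # (5.0, 2.0)
```

Evaluating every agent's `pose_at` on the same list of timestamps yields time-aligned snapshots that can be compared directly against the global reference model.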

Figure 4: All Data is Captured in the DLT

Using AI Methods to Understand Agent Perspectives and Behaviors

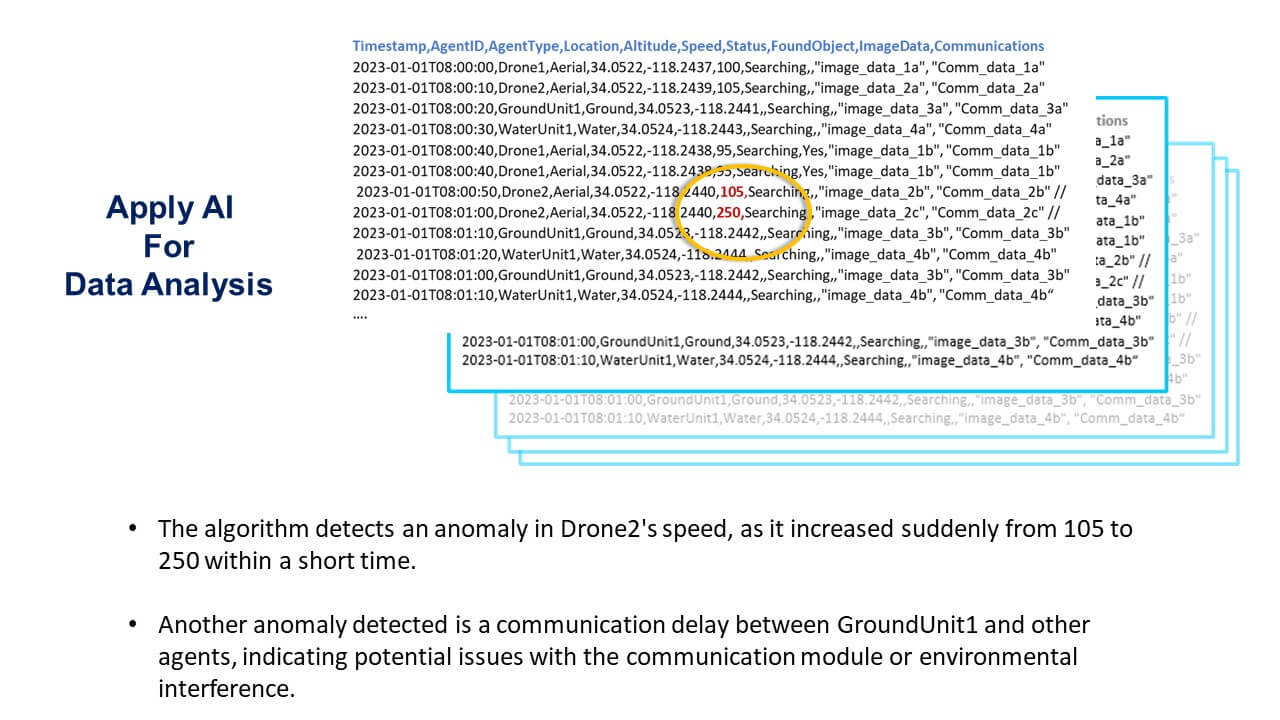

Applying AI methods to understand agent perspectives and behaviors can significantly enhance the analysis of data stored in the C-DLT. The transactions recorded in the C-DLT offer a granular depiction of real-world scenarios as they unfold. The exact order of events is crucial for understanding agent perspectives and behaviors in multi-agent systems: knowing the sequence of events allows researchers to establish causal relationships, identify temporal dependencies, understand how agents adapt their strategies to context, resolve conflicts over resources, analyze and optimize system performance, detect anomalies, and monitor learning and adaptation processes.

By analyzing the data stored in the DLT, evaluators can apply a combination of analytical and AI techniques, such as graph theory, social network analysis, and anomaly detection algorithms, to gain a more comprehensive understanding of the environmental landscape, interactions, behaviors, and decision-making processes. For example, graph theory can be used to represent relationships between agents and sensors, allowing researchers to identify critical agents or influencers, understand the communication structure, and discover emergent patterns. Social network analysis can further enhance the understanding of agent interactions and their impact on overall system performance.
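As an illustration of the graph-theoretic view, message transactions can be turned into an undirected communication graph whose degree centrality highlights the agents connected to the most peers. The sketch uses plain Python and assumes hypothetical `(sender, receiver)` pairs extracted from the ledger; libraries such as NetworkX provide the same measure (`degree_centrality`) along with richer ones.

```python
from collections import defaultdict


def degree_centrality(messages):
    """Normalized degree centrality of an undirected communication graph.

    messages: iterable of (sender, receiver) pairs (hypothetical ledger export).
    Repeated messages between the same pair count as a single edge.
    """
    neighbors = defaultdict(set)
    for sender, receiver in messages:
        if sender == receiver:
            continue  # ignore self-messages
        neighbors[sender].add(receiver)
        neighbors[receiver].add(sender)
    n = len(neighbors)
    if n < 2:
        return {node: 0.0 for node in neighbors}
    # Normalize by the maximum possible degree, n - 1
    return {node: len(adj) / (n - 1) for node, adj in neighbors.items()}


messages = [
    ("uav-1", "ugv-1"), ("uav-1", "usv-1"), ("uav-1", "uav-2"),
    ("ugv-1", "usv-1"), ("uav-1", "ugv-1"),
]
centrality = degree_centrality(messages)
print(max(centrality, key=centrality.get))  # uav-1
```

A high-centrality agent such as `uav-1` here is a candidate critical node: if it fails, many communication paths are lost at once.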

Anomaly detection algorithms, on the other hand, can be used to identify unusual patterns or behaviors that deviate from the norm. This can be crucial for detecting unexpected agent behaviors, sensor malfunctions, or environmental changes that could affect system performance. For example, in the search and rescue scenario, an anomaly detection algorithm could flag an unexpected drop in an agent’s communication frequency, indicating a potential issue with its communication module or environmental interference. Once such anomalies are identified, mission commanders may respond by substituting a known-good agent and removing the anomalous agent from operations. Researchers or developers can subsequently investigate the underlying causes of the anomalies and address them to improve the system’s future performance and reliability. Integrating new AI methods into the analysis of data stored on the distributed ledger may enable a deeper understanding of agent perspectives and behaviors, ultimately enhancing the effectiveness of autonomous multi-agent systems.
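A minimal sketch of the communication-frequency check described above: count an agent's ledger transactions in fixed time windows and flag windows whose count falls well below that agent's own baseline. The z-score rule, the five-window baseline, and the threshold of -2 are illustrative assumptions, not the detector the paper has in mind; a fielded system might use more robust statistics or a learned model.

```python
from statistics import mean, pstdev


def low_rate_windows(counts, baseline_windows=5, z_threshold=-2.0):
    """Flag window indices where the message count drops far below the baseline.

    counts: messages per fixed-length window for one agent, in time order
    baseline_windows: number of initial windows used to estimate normal behavior
    """
    if baseline_windows < 1 or len(counts) <= baseline_windows:
        return []  # not enough data to form a baseline and test later windows
    baseline = counts[:baseline_windows]
    mu, sigma = mean(baseline), pstdev(baseline)
    if sigma == 0:
        sigma = 1.0  # crude fallback when the baseline is perfectly steady
    flagged = []
    for i in range(baseline_windows, len(counts)):
        z = (counts[i] - mu) / sigma
        if z <= z_threshold:
            flagged.append(i)
    return flagged


counts = [20, 22, 19, 21, 20, 21, 20, 3, 0]
print(low_rate_windows(counts))  # [7, 8]
```

Because each flagged window maps back to a time range on the ledger, the same transactions can then be pulled up for the root-cause investigation.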

Using Ledger Data to Enhance Modeling and Simulation

Leveraging ledger data can significantly enhance modeling and simulation in the analysis of complex adaptive AI-enabled systems (CA2IS) such as autonomous multi-agent systems. The proposed C-DLT’s recorded transactions offer a granular depiction of real-world scenarios as they actually occurred. Using this data in simulations introduces authentic variability and complexity, enabling a deeper understanding of emergent behaviors and outcomes. The comprehensive metadata within the transaction ledger can help simulations recreate intricate relationships, temporal sequences, and causal links between actions and reactions. Anomalies captured in the transactions contribute to more robust simulations by allowing unexpected events and their consequences to be reproduced. This capability allows researchers to explore “what-if” scenarios, test hypotheses, and validate the accuracy of simulation outcomes against actual recorded data. By integrating DLT with the modeling and simulation process, a more accurate and realistic analysis of CA2IS can be achieved, enhancing the overall understanding and management of these systems in real-world scenarios.
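To validate a simulation against what actually happened, one simple metric is the root-mean-square error (RMSE) between a simulated trajectory and the trajectory recorded on the ledger at the same timestamps. This sketch assumes both trajectories have already been aligned to common timestamps, and the 5 m acceptance tolerance is a hypothetical value an evaluator would set per scenario.

```python
from math import sqrt


def trajectory_rmse(simulated, recorded):
    """Root-mean-square position error between two time-aligned 2-D trajectories.

    simulated, recorded: equal-length lists of (x, y) positions sampled at the
    same timestamps (e.g., recorded positions exported from the ledger).
    """
    if len(simulated) != len(recorded) or not simulated:
        raise ValueError("trajectories must be non-empty and the same length")
    sq = [(xs - xr) ** 2 + (ys - yr) ** 2
          for (xs, ys), (xr, yr) in zip(simulated, recorded)]
    return sqrt(sum(sq) / len(sq))


recorded = [(0.0, 0.0), (10.0, 4.0), (20.0, 4.0)]
simulated = [(0.0, 0.0), (10.0, 7.0), (24.0, 4.0)]
error = trajectory_rmse(simulated, recorded)
TOLERANCE_M = 5.0  # hypothetical acceptance threshold, meters
print(f"{error:.2f}")  # 2.89
print("valid" if error <= TOLERANCE_M else "needs calibration")  # valid
```

The same comparison supports "what-if" studies: perturb the replayed inputs (for example, remove one agent), rerun the simulation, and measure how far the outcome moves from the recorded baseline.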

Figure 5: Using AI to Find Anomalies in the Ledger Data

Discussion

The challenges of testing and evaluating complex adaptive AI-enabled systems (CA2IS), such as autonomous multi-agent systems, in real-world scenarios are complex and multifaceted. This is particularly true for systems that fall within the complex domain of the Cynefin framework, where cause-and-effect relationships can be understood only in retrospect because of the system’s unpredictable and emergent nature. Traditional testing methods often struggle to evaluate the complex, unpredictable, and adaptive nature of these systems, particularly in dynamic environments. To address this, our study proposes consensus-enabled distributed ledger technology (C-DLT) as a novel framework for in-situ testing of CA2IS. This approach aims to capture real-world context and emergent behaviors, addressing limitations of traditional validation methods and providing a more comprehensive evaluation of system performance in its actual operational environment. Our approach does not replace traditional testing methods, which remain essential for evaluating individual components and subsystems; rather, it complements them with in-situ testing that focuses on the emergent behaviors and interactions of the entire system within its intended operational environment. We believe that this holistic approach helps ensure that the complexities and challenges posed by CA2IS are adequately addressed, leading to more robust, reliable, and efficient systems capable of operating effectively in complex, real-world scenarios.

The proposed C-DLT plays a crucial role in facilitating real-time data collection and synchronization during in-situ testing. This contributes significantly to achieving close alignment with a global reference model, which is critical for assessing how well the system conforms to established standards and expectations.
Moreover, the C-DLT’s secure and transparent data-sharing capabilities enhance collaboration by providing a single, trustworthy source of data for all stakeholders. The detailed transactions recorded by the C-DLT offer a granular depiction of real-world scenarios, which can be used to enhance modeling and simulation in the analysis of CA2IS and enable a deeper understanding of emergent behaviors and outcomes. The proposed C-DLT approach provides robust data logging and recording for subsequent off-line analysis and supports modeling and simulation scenarios. Each test event potentially becomes a source of new training data that can be used to improve system performance and potentially expand system capabilities.

While the proposed C-DLT offers numerous benefits for in-situ testing of CA2IS, several challenges and limitations need to be addressed when implementing this technology. Some of these challenges include scalability, privacy, and the potential need for standardized protocols or data formats.

Scalability is a critical challenge when using the C-DLT in the context of in-situ testing, especially when dealing with large-scale multi-agent systems that generate vast amounts of data. As the number of agents and the volume of data increase, the computational and storage requirements for the distributed ledger grow correspondingly. This can lead to increased latency and decreased performance, particularly in situations where real-time data processing and decision-making are crucial. To address this challenge, researchers and practitioners need to explore novel approaches to optimizing the scalability of DLT.

Privacy is another concern when using DLT for in-situ testing, as sensitive information about the agents, their actions, and the environment may be stored on the ledger. Controlling data access to ensure the confidentiality and privacy of this data is essential, particularly when multiple organizations are involved and compliance with data access permissions and authorities is required.

The potential need for standardized protocols and data formats presents another challenge. With numerous organizations and agents involved in testing, each may utilize different technologies, protocols, or data formats. Ensuring effective communication and interaction among these heterogeneous agents requires the establishment of standardized protocols and data formats that can be understood and processed by all parties. Developing and adopting such standards can be a complex and time-consuming process, often requiring collaboration and consensus-building among various stakeholders. However, achieving this standardization is crucial for ensuring interoperability and efficiency.
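To make the standardization question concrete, the sketch below shows one possible shared record format that heterogeneous agents could write to the ledger, with basic validation and a deterministic JSON encoding. Every field name, the event vocabulary, and the schema version string are hypothetical; an actual standard would be negotiated among the participating organizations.

```python
import json
from dataclasses import dataclass, asdict, field

SCHEMA_VERSION = "0.1-draft"                      # hypothetical version tag
ALLOWED_EVENTS = {"pose", "detection", "status"}  # hypothetical event vocabulary


@dataclass
class LedgerRecord:
    """One agent report in a hypothetical shared interchange format."""
    agent_id: str
    organization: str
    timestamp_s: float  # agent-reported time; consensus order comes from the ledger
    position: tuple     # (x, y, z) in a shared frame of reference, meters
    event_type: str     # must be one of ALLOWED_EVENTS
    payload: dict = field(default_factory=dict)

    def __post_init__(self):
        # Reject records that other parties could not interpret
        if len(self.position) != 3:
            raise ValueError("position must be (x, y, z)")
        if self.event_type not in ALLOWED_EVENTS:
            raise ValueError(f"unknown event_type: {self.event_type}")

    def to_json(self):
        # Sorted keys and compact separators yield a deterministic string, so
        # parties using this encoder serialize identical records identically
        body = {**asdict(self), "schema": SCHEMA_VERSION}
        return json.dumps(body, sort_keys=True, separators=(",", ":"))


rec = LedgerRecord("uav-1", "org-a", 118.5, (12.0, 6.0, 40.0), "detection",
                   {"object": "clothing", "confidence": 0.8})
print(rec.to_json())
```

Carrying an explicit schema version in every record lets organizations evolve the format without breaking older agents' ability to parse the ledger.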

Conclusion

Consensus-enabled distributed ledger technology (C-DLT) offers the potential to address challenges associated with testing and evaluating the performance of collaborative robotics and sensor systems within complex adaptive AI-enabled systems (CA2IS). By facilitating data collection and synchronization during real-time in-situ testing, the proposed C-DLT can contribute significantly to achieving a close fit to a global reference model. This paper presents a theoretical framework and a hypothetical case study (supported by initial feasibility experiments as referenced). The actual implementation of C-DLT in testing and evaluating CA2IS may present additional challenges and limitations not covered in this paper. For instance, issues related to scalability, privacy, and the need for standardized protocols or data formats could arise. In addition, the effectiveness of C-DLT in capturing and managing the vast and varied data inputs from diverse conditions and numerous sensors remains to be empirically tested. Therefore, future research should continue exploring C-DLT applications in testing and evaluation, investigating potential challenges and limitations, and developing a unified architecture and standards for testbeds. Applied research investigating the utility of the C-DLT in real-world testing scenarios is particularly crucial.

Examining the proposed approach’s application in other industries and use cases, such as healthcare, transportation, and smart cities, could further demonstrate its broader implications and potential impact.

Future research should delve into the concept of digital models, shadows, and twins in the context of AI-enabled complex adaptive systems (CA2IS). These digital representations have their limitations, and the application of consensus-enabled distributed ledger technology (C-DLT) could potentially extend their capabilities. C-DLT, with its ability to capture real-time transactions among multiple agents within a common environment, could enhance these digital representations by providing a comprehensive, synchronized record of the system’s behavior in its actual operational environment. This effectively expands the concept of digital twins to a system-wide level. By leveraging the proposed C-DLT to address challenges associated with observing and analyzing collaborative robotics and sensor systems from a distance, and by integrating this data into an enhanced global frame of reference model, the proposed approach could represent a significant advance toward optimal performance and alignment with the requirements of CA2IS modeling. This approach holds the promise of transforming the testing and evaluation landscape, paving the way for more robust, reliable, and efficient systems capable of operating effectively in complex, real-world scenarios.

References

Ahner, D. K., Parson, C. R., Thompson, J. L., & Rowell, W. F. (2018). Overcoming the challenges in test and evaluation of autonomous robotic systems. The ITEA Journal of Test and Evaluation, 39, 86-94.

Arnold, T., & Scheutz, M. (2018). The “big red button” is too late: an alternative model for the ethical evaluation of AI systems. Ethics and Information Technology, 20, 59-69.

Baldoni, R., Bonomi, S., Platania, M., & Querzoni, L. (2012, May). Dynamic message ordering for topic-based publish/subscribe systems. In 2012 IEEE 26th International Parallel and Distributed Processing Symposium (pp. 909-920). IEEE.

Buzachis, A., Celesti, A., Galletta, A., Fazio, M., Fortino, G., & Villari, M. (2020). A multi-agent autonomous intersection management (MA-AIM) system for smart cities leveraging edge-of-things and Blockchain. Information Sciences, 522, 148-163.

Chen, J., Sun, J., & Wang, G. (2022). From unmanned systems to autonomous intelligent systems. Engineering, 12, 16-19.

de Bruijn, H., Warnier, M., & Janssen, M. (2022). The perils and pitfalls of explainable AI: Strategies for explaining algorithmic decision-making. Government Information Quarterly, 39(2), 101666.

De Lima Filho, G. M., Kuroswiski, A. R., Medeiros, F. L. L., Voskuijl, M., Monsuur, H., & Passaro, A. (2022). Optimization of unmanned air vehicle tactical formation in war games. IEEE Access, 10, 21727-21741.

Delavarpour, N., Koparan, C., Nowatzki, J., Bajwa, S., & Sun, X. (2021). A technical study on UAV characteristics for precision agriculture applications and associated practical challenges. Remote Sensing, 13(6), 1204.

Feraru, V. A., Andersen, R. E., & Boukas, E. (2020, November). Towards an autonomous UAV-based system to assist search and rescue operations in man overboard incidents. In 2020 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR) (pp. 57-64). IEEE.

Gorbunova, M., Masek, P., Komarov, M., & Ometov, A. (2022). Distributed ledger technology: State-of-the-art and current challenges. Computer Science and Information Systems, 19(1), 65-85.

Happ, D., & Wolisz, A. (2016, November). Limitations of the Pub/Sub pattern for cloud based IoT and their implications. In 2016 Cloudification of the Internet of Things (CIoT) (pp. 1-6). IEEE.

Haugh, B. A., Sparrow, D. A., & Tate, D. M. (2018). Status of Test, Evaluation, Verification, and Validation (TEV&V) of Autonomous Systems (p. 32). Institute for Defense Analyses.

Hobson, S. SERC TALKS: How Is T&E Transforming to Adequately Assess DOD Systems in Complex… Environments? [Video]. YouTube. Accessed April 2023. https://www.youtube.com/watch?v=zu1WBARmCPc

Jiang, W. C., Narayanan, V., & Li, J. S. (2020). Model learning and knowledge sharing for cooperative multiagent systems in stochastic environment. IEEE Transactions on Cybernetics, 51(12), 5717-5727.

Kordy, B., Piètre-Cambacédès, L., & Schweitzer, P. (2014). DAG-based attack and defense modeling: Don’t miss the forest for the attack trees. Computer Science Review, 13, 1-38.

Koutsoukos, X., et al. (2023, February 6). Pre-curser for Fully Distributed Control of Powergrids [Unpublished manuscript].

Kurtz, C. F., & Snowden, D. J. (2003). The new dynamics of strategy: Sense-making in a complex and complicated world. IBM Systems Journal, 42(3), 462-483.

Lanus, E., Hernandez, I., Dachowicz, A., Freeman, L. J., Grande, M., Lang, A., … & Welch, S. (2021, June). Test and evaluation framework for multi-agent systems of autonomous intelligent agents. In 2021 16th International Conference of System of Systems Engineering (SoSE) (pp. 203-209). IEEE.

Mitola, J., III. SERC TALKS: How Can We Systems Engineer Trust into Increasingly Autonomous Cyber-Physical Systems? [Video]. YouTube. Accessed April 2023. https://www.youtube.com/watch?v=gvNHr4_m1hY

National Science and Technology Council (US), Cyber Security and Information Assurance Interagency Working Group, Networking and Information Technology Research and Development Subcommittee. (2023). Federal Cybersecurity Research and Development Strategic Plan. Executive Office of the President of the United States. Federal-Cybersecurity-RD-Strategic-Plan-2023 (whitehouse.gov).

Porter, D. J., & Dennis, J. W. (2020). Test & evaluation of AI-enabled and autonomous systems: A literature review.

Quan, W., Jia, L., Zhang, Z., Chen, C., & Wang, L. (2023, October). A Multi-Dimensional Dynamic Evaluation Method for the Intelligence of Unmanned Aerial Vehicle Swarm. In 2023 IEEE International Conference on Unmanned Systems (ICUS) (pp. 731-737). IEEE.

Reese, M. (2018). The proximity principle. In Elgar Encyclopedia of Environmental Law (pp. 219-233). Edward Elgar Publishing.

Tate, D. M., & Sparrow, D. A. (2018). Acquisition Challenges of Autonomous Systems.

Tolk, A. (2019). Limitations and usefulness of computer simulations for autonomous multi-agent systems research. In Summer of Simulation: 50 Years of Seminal Computer Simulation Research (pp. 77-96). Springer International Publishing.

Bibliography

Ahner, D. K., Parson, C. R., Thompson, J. L., & Rowell, W. F. (2018). Overcoming the challenges in test and evaluation of autonomous robotic systems. The ITEA Journal of Test and Evaluation, 39, 86-94.

Arnold, T., & Scheutz, M. (2018). The “big red button” is too late: an alternative model for the ethical evaluation of AI systems. Ethics and Information Technology, 20, 59-69.

Baldoni, R., Bonomi, S., Platania, M., & Querzoni, L. (2012, May). Dynamic message ordering for topic-based publish/subscribe systems. In 2012 IEEE 26th International Parallel and Distributed Processing Symposium (pp. 909-920). IEEE.

Buzachis, A., Celesti, A., Galletta, A., Fazio, M., Fortino, G., & Villari, M. (2020). A multi-agent autonomous intersection management (MA-AIM) system for smart cities leveraging edge-of-things and Blockchain. Information Sciences, 522, 148-163.

Chen, J., Sun, J., & Wang, G. (2022). From unmanned systems to autonomous intelligent systems. Engineering, 12, 16-19.

Clark, M., Alley, J., Deal, P. J., Depriest, J. C., Hansen, E., Heitmeyer, C., … & Corey, M. (2015). Autonomy community of interest (COI) test and evaluation, verification and validation (TEVV) working group: Technology investment strategy 2015-2018. Office of the Assistant Secretary of Defense for Research and Engineering TR.

Dahmann, J., Lane, J. A., Rebovich, G., & Lowry, R. (2010, June). Systems of systems test and evaluation challenges. In 2010 5th International Conference on System of Systems Engineering (pp. 1-6). IEEE.

de Bruijn, H., Warnier, M., & Janssen, M. (2022). The perils and pitfalls of explainable AI: Strategies for explaining algorithmic decision-making. Government Information Quarterly, 39(2), 101666.

De Lima Filho, G. M., Kuroswiski, A. R., Medeiros, F. L. L., Voskuijl, M., Monsuur, H., & Passaro, A. (2022). Optimization of unmanned air vehicle tactical formation in war games. IEEE Access, 10, 21727-21741.

Delavarpour, N., Koparan, C., Nowatzki, J., Bajwa, S., & Sun, X. (2021). A technical study on UAV characteristics for precision agriculture applications and associated practical challenges. Remote Sensing, 13(6), 1204.

Diller, A. C., & Fredericks, E. M. (2022, May). Towards run-time search for real-world multi-agent systems. In Proceedings of the 15th Workshop on Search-Based Software Testing (pp. 14-15).

Drew, D. S. (2021). Multi-agent systems for search and rescue applications. Current Robotics Reports, 2, 189-200.

Feraru, V. A., Andersen, R. E., & Boukas, E. (2020, November). Towards an autonomous UAV-based system to assist search and rescue operations in man overboard incidents. In 2020 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR) (pp. 57-64). IEEE.

Gorbunova, M., Masek, P., Komarov, M., & Ometov, A. (2022). Distributed ledger technology: State-of-the-art and current challenges. Computer Science and Information Systems, 19(1), 65-85.

Happ, D., & Wolisz, A. (2016, November). Limitations of the Pub/Sub pattern for cloud based IoT and their implications. In 2016 Cloudification of the Internet of Things (CIoT) (pp. 1-6). IEEE.

Haugh, B. A., Sparrow, D. A., & Tate, D. M. (2018). Status of Test, Evaluation, Verification, and Validation (TEV&V) of Autonomous Systems (p. 32). Institute for Defense Analyses.

Hobson, S. SERC TALKS: How Is T&E Transforming to Adequately Assess DOD Systems in Complex… Environments? [Video]. YouTube. Accessed April 2023. https://www.youtube.com/watch?v=zu1WBARmCPc

James, J., Hawthorne, D., Duncan, K., St. Leger, A., Sagisi, J., & Collins, M. (2019, November). An experimental framework for investigating hashgraph algorithm transaction speed. In Proceedings of the 2nd Workshop on Blockchain-enabled Networked Sensor (pp. 15-21).

Jiang, W. C., Narayanan, V., & Li, J. S. (2020). Model learning and knowledge sharing for cooperative multiagent systems in stochastic environment. IEEE Transactions on Cybernetics, 51(12), 5717-5727.

Kordy, B., Piètre-Cambacédès, L., & Schweitzer, P. (2014). DAG-based attack and defense modeling: Don’t miss the forest for the attack trees. Computer Science Review, 13, 1-38.

Koutsoukos, X., et al. (2023, February 6). Pre-curser for Fully Distributed Control of Powergrids [Unpublished manuscript].

Kurtz, C. F., & Snowden, D. J. (2003). The new dynamics of strategy: Sense-making in a complex and complicated world. IBM Systems Journal, 42(3), 462-483.

Lanus, E., Hernandez, I., Dachowicz, A., Freeman, L. J., Grande, M., Lang, A., … & Welch, S. (2021, June). Test and evaluation framework for multi-agent systems of autonomous intelligent agents. In 2021 16th International Conference of System of Systems Engineering (SoSE) (pp. 203-209). IEEE.

Mahela, O. P., Khosravy, M., Gupta, N., Khan, B., Alhelou, H. H., Mahla, R., … & Siano, P. (2020). Comprehensive overview of multi-agent systems for controlling smart grids. CSEE Journal of Power and Energy Systems, 8(1), 115-131.

Mitola, J., III. SERC TALKS: How Can We Systems Engineer Trust into Increasingly Autonomous Cyber-Physical Systems? [Video]. YouTube. Accessed April 2023. https://www.youtube.com/watch?v=gvNHr4_m1hY

National Academies of Sciences, Engineering, and Medicine. (2023). Test and Evaluation Challenges in Artificial Intelligence-Enabled Systems for the Department of the Air Force.

National Academies of Sciences, Engineering, and Medicine. (2021). Necessary DoD Range Capabilities to Ensure Operational Superiority of US Defense Systems: Testing for the Future Fight.

National Science and Technology Council (US), Cyber Security and Information Assurance Interagency Working Group, Networking and Information Technology Research and Development Subcommittee. (2023). Federal Cybersecurity Research and Development Strategic Plan. Executive Office of the President of the United States. Federal-Cybersecurity-RD-Strategic-Plan-2023 (whitehouse.gov).

Porter, D. J. (2019). Demystifying the black box – A test strategy for autonomy. Paper presented at the DATAWorks 2019, Springfield, VA.

Porter, D. J. (2020). A HellerVVA problem: The Catch-22 for simulated testing of fully autonomous systems. Paper presented at the DATAWorks 2020, COVID Virtual.

Porter, D. J., & Dennis, J. W. (2020). Test & evaluation of AI-enabled and autonomous systems: A literature review.

Quan, W., Jia, L., Zhang, Z., Chen, C., & Wang, L. (2023, October). A Multi-Dimensional Dynamic Evaluation Method for the Intelligence of Unmanned Aerial Vehicle Swarm. In 2023 IEEE International Conference on Unmanned Systems (ICUS) (pp. 731-737). IEEE.

Rainey, L. B., & Holland, O. T. (Eds.). (2022). Emergent Behavior in System of Systems Engineering: Real-World Applications. CRC Press.

Reese, M. (2018). The proximity principle. In Elgar Encyclopedia of Environmental Law (pp. 219-233). Edward Elgar Publishing.

Schmidt, E., Work, R. O., Bajraktari, Y., Catz, S., Horvitz, E. J., Chien, S., … & Moore, A. W. (2021). National Security Commission on Artificial Intelligence.

Tate, D. M., & Sparrow, D. A. (2018). Acquisition Challenges of Autonomous Systems.

Tate, D., & Sparrow, D. (2019). Lesson 2: How do autonomous capabilities affect T&E? Autonomous Systems Test & Evaluation – CLE 002: Defense Acquisition University.

Tolk, A. (2019). Limitations and usefulness of computer simulations for autonomous multi-agent systems research. In Summer of Simulation: 50 Years of Seminal Computer Simulation Research (pp. 77-96). Springer International Publishing.

Author Biographies

Stuart Harshbarger is a distinguished technology leader with a deep focus on artificial intelligence (AI) and robotics. Throughout his career, Stuart has held various leadership roles in government and defense R&D programs, with extensive experience in avionics test and evaluation. He directly serves the US Government in Technical Director and Innovation Leadership roles. In the latter capacity, he has led several artificial intelligence program initiatives and is responsible for promoting best practices for transitioning emerging research outcomes into enterprise operations. He currently leads a portfolio of AI/ML STEM pipeline enrichment activities that strive to accelerate well-informed AI adoption through broad community partnerships, with a focus on AI/ML assurance.

Dr. Rosa Heckle has over 30 years of experience as a software and systems engineer. For the past 10 years, she has maintained a strong understanding of the artificial intelligence (AI) and machine learning ecosystems to effectively drive digital transformation. She provides strategic guidance in assessing the desirability, feasibility, and viability of artificial intelligence capabilities. Her specific focus has been the testing and evaluation of AI-enabled systems, and she is currently supporting several AI Assurance and Responsible AI initiatives.

Michael Collins is a senior researcher with the Laboratory for Advanced Cybersecurity Research at NSA. His current research explores resilient and emergent behaviors in complex systems, with a focus on establishing mathematical foundations of emergence in complex systems and an emphasis on detection and influence as related to cybersecurity. Mr. Collins has built his career as a systems security evaluator, designer, and architect across the US Government, academia, and the private sector.
